Linux is hard to set up via the command line, and most people coming from Windows would like a familiar interface to ease them into Linux. With the ever-escalating price of cPanel, I am putting together a list of cPanel alternatives that cover a range of functionality. This list is mainly for my own research so I can pick the best GUI. I currently use CWP as it is the most comprehensive replacement for cPanel and is great for hobbyists.

My Reviews / Research

A lot of these panels are wrappers and read the configurations straight from the disk.
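To illustrate what "reading the configuration straight from the disk" can look like, here is a minimal sketch that pulls the `ServerName` and `DocumentRoot` out of Apache virtual host blocks. It is not taken from any of the panels reviewed here; the function name `parse_vhosts` and the sample configuration are made up for illustration, and a real panel would handle many more directives and edge cases.

```python
import re

def parse_vhosts(conf_text):
    """Return one dict per <VirtualHost> block with its ServerName and DocumentRoot."""
    vhosts = []
    # Grab the body of every <VirtualHost ...> ... </VirtualHost> block.
    blocks = re.findall(
        r"<VirtualHost\b[^>]*>(.*?)</VirtualHost>", conf_text, flags=re.S | re.I
    )
    for block in blocks:
        entry = {}
        for line in block.splitlines():
            line = line.strip()
            if not line or line.startswith("#"):
                continue  # skip blank lines and comments
            parts = line.split(None, 1)
            if len(parts) == 2 and parts[0].lower() in ("servername", "documentroot"):
                entry[parts[0].lower()] = parts[1].strip('"')
        vhosts.append(entry)
    return vhosts

# Hypothetical sample; a panel would read this text from a file on disk instead.
SAMPLE = """
<VirtualHost *:80>
    ServerName example.com
    DocumentRoot "/var/www/example"
</VirtualHost>
"""

print(parse_vhosts(SAMPLE))
```

Because the panel only reads what is already on disk, manual edits to the same files show up in the GUI, which is why these panels can often be extended by hand.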

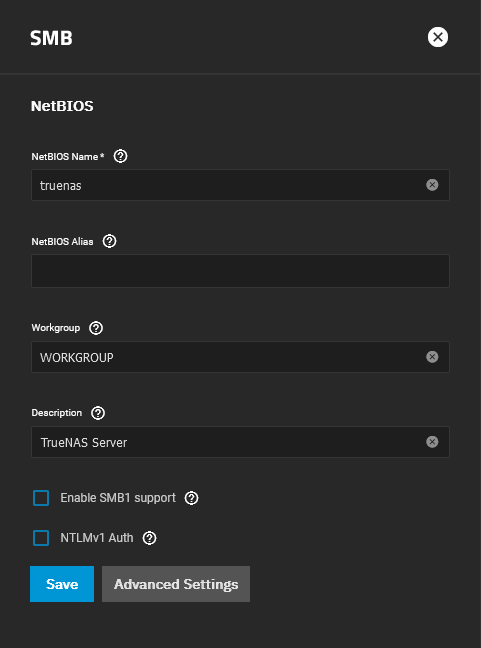

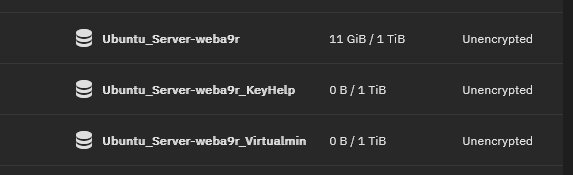

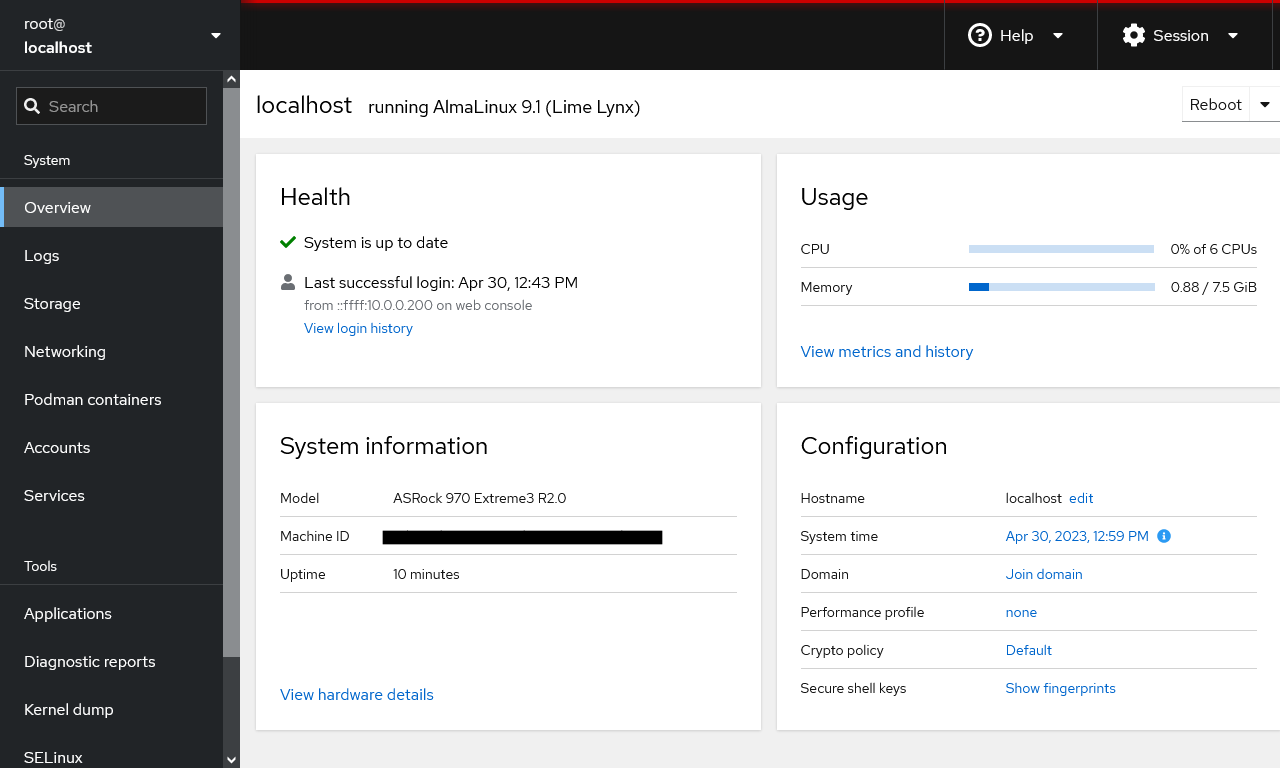

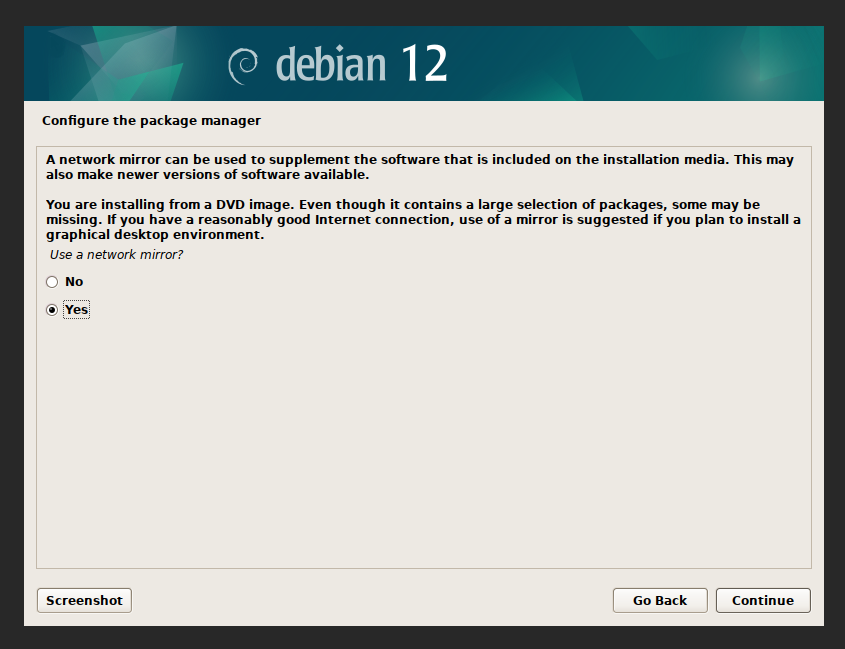

I will be using Ubuntu Server LTS (Minimal) with no extra packages except for OpenSSH. You can also use AlmaLinux Server (Minimal). Ubuntu has far more support across different software, so it is my preferred OS. Unless specified, use the real root account and not sudo.

Always use the Long Term Support (LTS) version of the OS you are going to use, because you want stability and support for the software; bleeding-edge shinies (features) are not needed.

It should be noted that I assess what the software comes with natively. If you understand Linux, a lot of these panels can be extended manually with features they do not have, or to turn on things that do not have a button in the panel.
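Before running any panel installer, the two requirements above (an LTS release and the real root account) can be checked with a short script. This is only a sketch: the helper names are my own, and the LTS test assumes the Ubuntu convention of putting "LTS" in the `VERSION` field of `/etc/os-release`, so it will not recognise AlmaLinux or other distributions that do not use that label.

```python
import os

def parse_os_release(text):
    """Parse the KEY=value lines of an os-release file into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info

def is_lts(info):
    """Assumption: Ubuntu marks LTS releases with 'LTS' in the VERSION field."""
    return "LTS" in info.get("VERSION", "")

def running_as_root():
    """True when the effective user ID is 0 (Unix only)."""
    return os.geteuid() == 0

if __name__ == "__main__":
    try:
        with open("/etc/os-release") as fh:
            release = parse_os_release(fh.read())
    except OSError:
        release = {}
    print("Distribution:", release.get("PRETTY_NAME", "unknown"))
    print("LTS release:", is_lts(release))
    print("Running as root:", running_as_root())
```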

Table of Contents

The Panels (in no particular order)

Other Stuff

The Shortlist (TL;DR)

This is my personal selection of panels that I would look at first. The others might suit you better, so they might still be worth looking at. The list is roughly ordered from Business+Paid first down to Hobbyist+Free, so start at the top and pick the one that suits you best.

- Webuzo
  - Cost: Paid
  - Suitable for: Hosting Company, Small Hosting Company
  - Notes:
    - Ideal cPanel/Plesk replacement for business.
    - From the company that makes Softaculous; a very nice-looking panel, how cPanel should be.
    - The price is great for hosting companies if you take out the unlimited package.
- My20i / StackCP
  - Cost: Free (can only be used with 20i.com or their resellers)
  - Suitable for: Hosting Company, Small Hosting Company
  - Notes:
    - Ideal cPanel/Plesk replacement for business.
    - The price is great for hosting companies.
    - Although not a separate panel, this British SaaS offering should be considered.
- DirectAdmin
  - Cost: Paid
  - Suitable for: Hosting Company, Small Hosting Company
  - Notes:
    - Ideal cPanel/Plesk replacement for business.
    - The price is great for hosting companies if you take out the unlimited package.
    - Also check your requirements because of the paid add-ons model.
- Control Web Panel (CWP)
  - Cost: Free + Paid
  - Suitable for: Hosting Company, Small Hosting Company, Hobbyists
  - Notes:
- KeyHelp
  - Cost: Free + Paid
  - Suitable for: Small Hosting Company, Hobbyists
  - Notes:
- Virtualmin
  - Cost: Free + Paid
  - Suitable for: Small Hosting Company, Hobbyists
  - Notes:
    - The most feature-rich of the free panels, and of some of the paid ones.
    - Because of the theme, the layout, and the number of options and features, this is not suitable for the casual user or clients.
- myVesta
  - Cost: Free
  - Suitable for: Hobbyists
  - Notes:
    - This panel will get the job done and is actively being developed.
- HestiaCP
  - Cost: Free
  - Suitable for: Hobbyists
  - Notes:
    - This panel will get the job done and is actively being developed.
    - Nice dark theme.
    - You can't use 'Apache only' mode any more with this panel.
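The "start at the top and take the first one that fits" approach can be sketched in a few lines of Python. The data below is copied from the shortlist above; the `Panel` class, the `first_match` function and the `free_only` flag are my own illustrative names, and the matching is deliberately crude (it does not capture the 20i.com hosting requirement, for example).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Panel:
    name: str
    cost: str
    suitable_for: tuple

# Same order as the shortlist: Business+Paid first, Hobbyist+Free last.
SHORTLIST = [
    Panel("Webuzo", "Paid", ("Hosting Company", "Small Hosting Company")),
    Panel("My20i / StackCP", "Free", ("Hosting Company", "Small Hosting Company")),
    Panel("DirectAdmin", "Paid", ("Hosting Company", "Small Hosting Company")),
    Panel("Control Web Panel (CWP)", "Free + Paid",
          ("Hosting Company", "Small Hosting Company", "Hobbyists")),
    Panel("KeyHelp", "Free + Paid", ("Small Hosting Company", "Hobbyists")),
    Panel("Virtualmin", "Free + Paid", ("Small Hosting Company", "Hobbyists")),
    Panel("myVesta", "Free", ("Hobbyists",)),
    Panel("HestiaCP", "Free", ("Hobbyists",)),
]

def first_match(audience, free_only=False):
    """Walk the shortlist top-down and return the first panel that fits."""
    for panel in SHORTLIST:
        if audience not in panel.suitable_for:
            continue
        if free_only and "Free" not in panel.cost:
            continue  # skip panels with no free option
        return panel
    return None

for audience in ("Hosting Company", "Small Hosting Company", "Hobbyists"):
    match = first_match(audience, free_only=True)
    print(audience, "->", match.name if match else "no match")
```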

Hosting Company

These panels are designed for companies of any size that sell hosting, but they can also be used by hobbyists or techies running their own servers from home. They have all of the features required to sell hosting to end users. One key feature of these panels is that they have reseller accounts.

cPanel / WHM

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Paid |
| License | Proprietary |
| Supported OS | CentOS 7 / RHEL 7 / CloudLinux 6,7,8 / AlmaLinux 8 / Rocky Linux 8 / Ubuntu (on cPanel/WHM version 102 and higher) |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache / NGINX |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt / Sectigo |
| DNS Server | BIND / PowerDNS |
| DNS Zone Manager | √ |
| DNSSEC | √ |
| Multi-PHP | √ |
| Database Server | MariaDB / PostgreSQL |
| Database Admin | phpMyAdmin / phpPgAdmin |
| Email Server | Exim / Dovecot |
| Webmail | Horde |
| FTP Server | Pure-FTPd / ProFTPD |
| Caching | OPcache / Memcached |
| | |
| Email Validation | SPF / DKIM / DMARC |
| Spam Protection | SpamAssassin / Greylisting |
| Firewall | iptables / CSF / cPHulkd |
| WAF | ModSecurity / OWASP |
| Virus / Malware Scanning | ClamAV / ImunifyAV / Imunify360 |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | √ |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | AWStats / Webalizer |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | FTP / AWS S3 |
| File Manager | √ |
| | |
| Extendable by Plugins | √ |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | Tiers based on number of accounts |
| Server and Package Updates | GUI / CLI |
| Automatic Updates | √ |
| Can be Uninstalled | × |

cPanel is an industry leader and has everything you need for hosting and reselling. It is a web hosting control panel with a user-friendly interface and many features.

- Pros

- Complete hosting package.

- Auto updating.

- All features you will need for a modern hosting server.

- Cons

- Expensive.

- The more accounts, the more you pay.

Notes

- Sites

- General

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

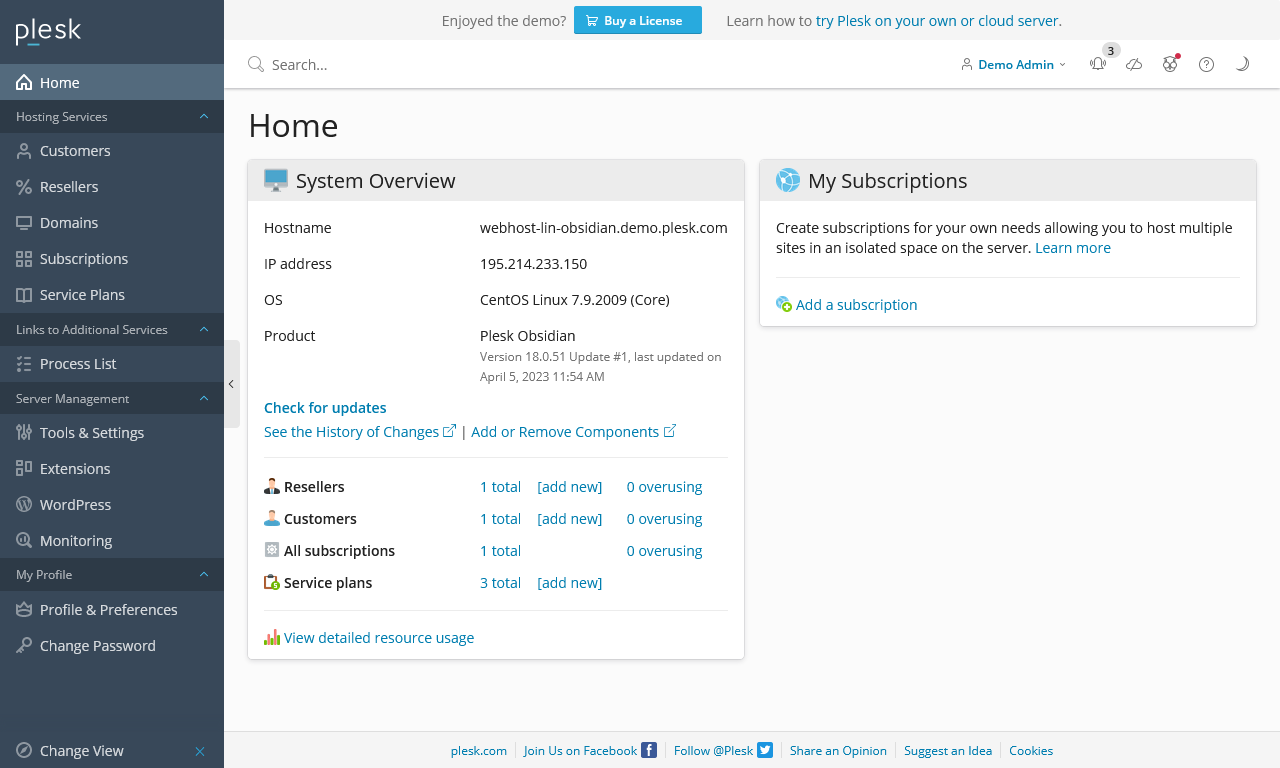

Plesk

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Paid |
| License | Proprietary |
| Supported OS | Debian / Ubuntu / CentOS 7 / RHEL / CloudLinux / AlmaLinux / Rocky Linux / Virtuozzo Linux 7 / Windows / Windows Server |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache / NGINX / IIS |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt / Sectigo |
| DNS Server | PowerDNS / BIND |
| DNS Zone Manager | √ |
| DNSSEC | √ |
| Multi-PHP | √ |
| Database Server | MariaDB / PostgreSQL |
| Database Admin | phpMyAdmin / phpPgAdmin |
| Email Server | Exim / Dovecot |
| Webmail | Horde |
| FTP Server | Pure-FTPd / ProFTPD |
| Caching | OPcache / Memcached |
| | |
| Email Validation | SPF / DKIM / DMARC |
| Spam Protection | SpamAssassin / Greylisting |
| Firewall | Plesk Firewall / Firewalld |
| WAF | Fail2Ban / ModSecurity / Atomic / OWASP / CWAF (Comodo) |
| Virus / Malware Scanning | ClamAV / ImunifyAV / Imunify360 |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | × |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | AWStats / Webalizer |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | FTP / AWS S3 |
| File Manager | √ |
| | |
| Extendable by Plugins | √ |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | 3 Tiers, Top one is unlimited |
| Server and Package Updates | GUI / CLI |
| Automatic Updates | √ |
| Can be Uninstalled | × |

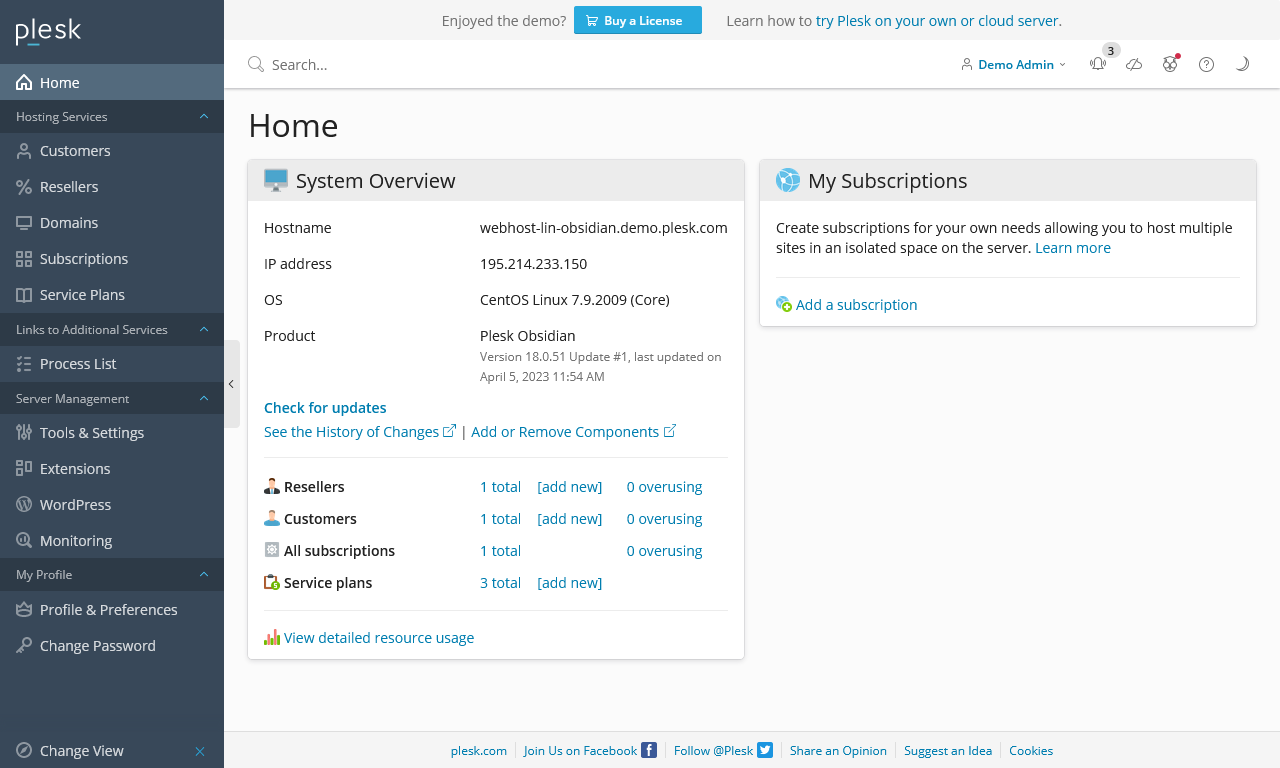

Plesk is an industry leader and has everything you need for hosting and reselling. It is a web hosting control panel with a user-friendly interface and many features.

- Pros

- Complete hosting package.

- Auto updating.

- All features you will need for a modern hosting server.

- Can run on Windows.

- Cons

Notes

- Sites

- General

- Software Requirements for Plesk Obsidian

- Read all about the software requirements, specifications and other important details to take full advantage of Plesk Obsidian.

- Full technology lists.

- Plesk Web Admin SE (Free Version) is only available on Vultr, DigitalOcean, AWS and Alibaba cloud platforms.

- Settings

- Plugins

- File Locations

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

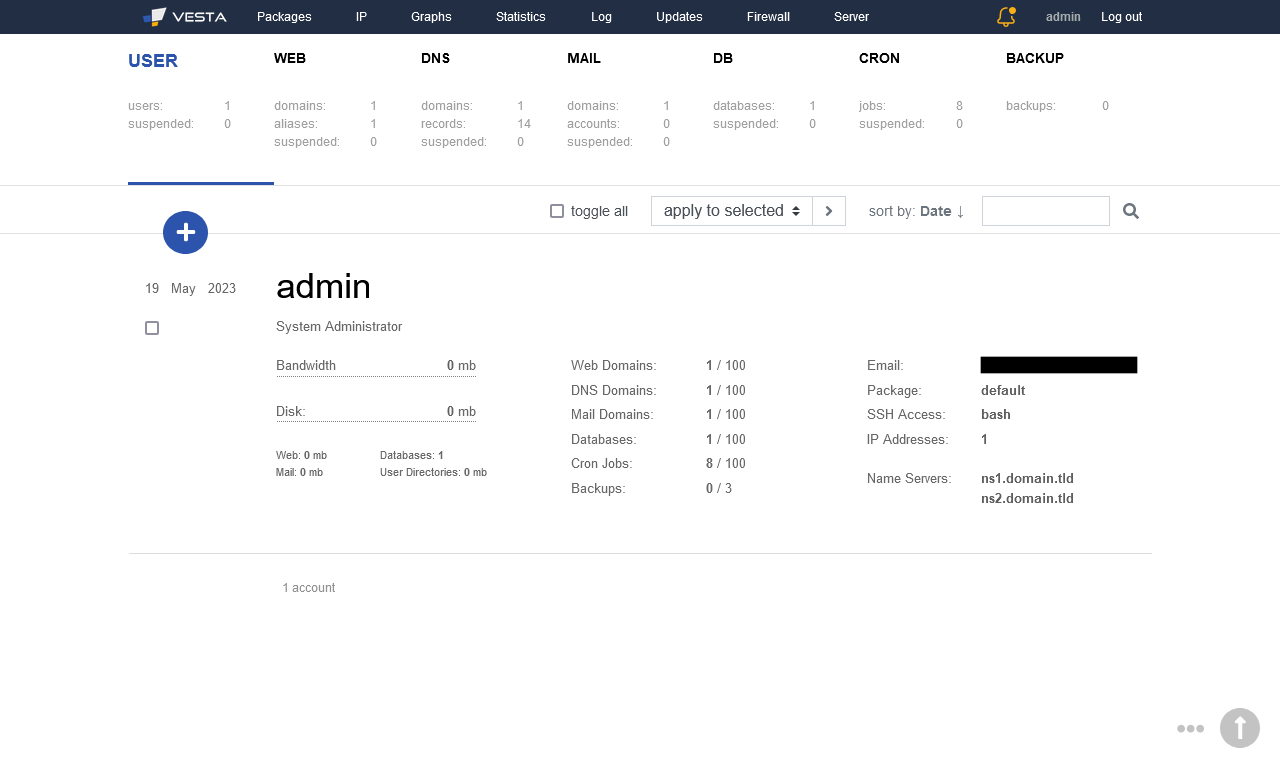

Control Web Panel (CWP)

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Both |
| License | Proprietary |
| Supported OS | CentOS / CentOS 8 Stream / Rocky Linux / AlmaLinux / Oracle Linux |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache / NGINX / LiteSpeed Enterprise / Varnish |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND |
| DNS Zone Manager | √ |
| DNSSEC | × |
| Multi-PHP | √ |
| Database Server | MariaDB / PostgreSQL / MongoDB |
| Database Admin | phpMyAdmin / PostgreSQL Database Manager / Mongo Database Manager |
| Email Server | Postfix / Dovecot |
| Webmail | Roundcube |
| FTP Server | Pure-FTPd |
| Caching | OPcache / Varnish |
| | |
| Email Validation | SPF / DKIM |
| Spam Protection | SpamAssassin / Spamhaus / SpamExperts / Amavis |
| Firewall | CSF |
| WAF | ModSecurity / CWAF (Comodo) / OWASP |
| Virus / Malware Scanning | ClamAV / Maldet / RKHunter / Lynis / Snuffleupagus |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | √ |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | GoAccess |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | FTP |
| File Manager | √ |
| | |
| Extendable by Plugins | × |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | × |
| Server and Package Updates | GUI / CLI |
| Automatic Updates | Panel Only |
| Can be Uninstalled | × |

Control Web Panel (CWP) is a free, modern and intuitive control panel for servers and VPS that makes day-to-day management and security easy. Security functionality and the interface were both taken into account throughout the development of the web panel.

- Pros

- Complete hosting package.

- All features you will need for a modern hosting server.

- It has great potential.

- The Pro version is so cheap it should be considered a donation, and I would recommend going straight for the Pro version.

- Ideal cPanel/Plesk replacement for business, but great for hobbyists as well.

- Cons

- Some bugs, and they are not fixed quickly.

- The Admin panel is dated.

- There needs to be a better road map for this software for it to live in the commercial world.

- Does require some work to set up and keep running.

Notes

- Sites

- General

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- Install

- Update / Upgrade

- Installation Instructions

- Misc

DirectAdmin

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Paid |
| License | Proprietary |
| Supported OS | RHEL / CentOS / AlmaLinux / Rocky Linux / Debian / Ubuntu |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache / Nginx / OpenLiteSpeed / LiteSpeed Enterprise |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND |
| DNS Zone Manager | √ |
| DNSSEC | √ |
| Multi-PHP | √ |
| Database Server | MySQL / MariaDB |
| Database Admin | phpMyAdmin |
| Email Server | Exim / Dovecot |
| Webmail | Roundcube / SquirrelMail |
| FTP Server | ProFTPD / Pure-FTPd |
| Caching | Redis |
| | |
| Email Validation | SPF / DKIM / DMARC |
| Spam Protection | SpamAssassin / Rspamd / RBL / Easy Spam Fighter / BlockCracking / Pigeonhole |
| Firewall | iptables / CSF / Firewalld |
| WAF | ModSecurity / Comodo WAF (CWAF) / Snuffleupagus |
| Virus / Malware Scanning | ClamAV / Imunify360 (Paid Addon) |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | × |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | AWStats / Webalizer |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | JetBackup (Paid Addon) / Acronis (Paid Addon) |
| File Manager | √ |
| | |
| Extendable by Plugins | √ |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | Number of domains (not subdomains) depends on your tier |
| Server and Package Updates | CLI / GUI |
| Automatic Updates | √ |
| Can be Uninstalled | × |

DirectAdmin is a modern web hosting control panel with regular updates. I found its control panel uncomfortable to use because of the hidden menus and the low-contrast, ultra-bright theme: ultra-clean gone too far. The menu can be stickied, which helps a lot, and if I ever find where to enable the dark theme that would probably fix the theme. This panel is definitely feature rich and, for the price, is ideal for hosting your own websites at home if you are willing to pay for it. For the hosting companies out there, it is definitely a good replacement for Plesk or cPanel.

- Pros

- Subdomains do not count toward the domain limit, so you could theoretically have unlimited subdomains.

- Updated often.

- DirectAdmin is widely supported like cPanel and Plesk.

- Integrated Ticketing System

- Modern and responsive UI

- Feature rich

- Has a GIT server option

- Reseller features

- Ideal cPanel/Plesk replacement for business.

- Cons

- They removed the `Personal Tier`, so it is a bit expensive to run at home.

- Not sure how much control over the Apache setup you have.

- The demo is crippled so you cannot look at most features.

- The default theme and menu settings are bad.

- Pricey unless you go for the unlimited plan which is only suitable for business.

- There seem to be a lot of paid add-ons that should be included, such as server-level backup (JetBackup / Acronis). This might point to a business model that we have seen before.

Notes

- Sites

- General

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

Geek Panel

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Free |
| License | Proprietary |
| Supported OS | CentOS / AlmaLinux / Rocky Linux / Debian / Ubuntu / Fedora |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | √ |
| Web Server | Apache |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND |
| DNS Zone Manager | √ |
| DNSSEC | × |
| Multi-PHP | √ |
| Database Server | MariaDB / SQLite / PostgreSQL |
| Database Admin | phpMyAdmin |
| Email Server | Postfix / Dovecot |
| Webmail | Roundcube |
| FTP Server | VsFTPd |
| Caching | Memcached |
| | |
| Email Validation | SPF / DKIM |
| Spam Protection | Sending rate restriction |
| Firewall | × |
| WAF | × |
| Virus / Malware Scanning | ClamAV |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | √ |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | Bandwidth |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | FTP / SCP |
| File Manager | √ |
| | |
| Extendable by Plugins | √ (seems to be planned) |
| API | √ |
| WHMCS Support | ? |
| Panel Account Restrictions | × |
| Server and Package Updates | CLI |
| Automatic Updates | ? |
| Can be Uninstalled | × |

Geek Panel (a.k.a. bbPanel) is a new control panel, and this shows: although it looks very close to cPanel, it still has bugs, is not feature complete, and has no community or issue tracker. To confuse things, there are several websites for this one product and they are all slightly different.

GeekPanel is built with security in mind to meet everyone's needs, from beginners to top admin professionals, for web hosting control panel management. It is a smart, scalable and secure hosting control panel: a comprehensive Linux system for server admins and a broad toolset for customers to manage shared web hosting, VPS, cloud and dedicated servers along with their domains, emails and websites, with a ton of rich features.

- Pros

- It does have a small bank of installable platforms such as Joomla and WordPress.

- Has great potential as a cPanel clone.

- Ideal cPanel/Plesk replacement for business.

- Cons

- Many bugs.

- Not feature complete.

- Confusing websites.

- You cannot raw edit the php.ini file in the GUI, and a lot of other settings are hidden from the admin.

Notes

- Sites

- Homepage

- Demo

- Changelog

- Code Repository

- Forum

- Docs

- Plugins

- General

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- The panel files /geekpanel/

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

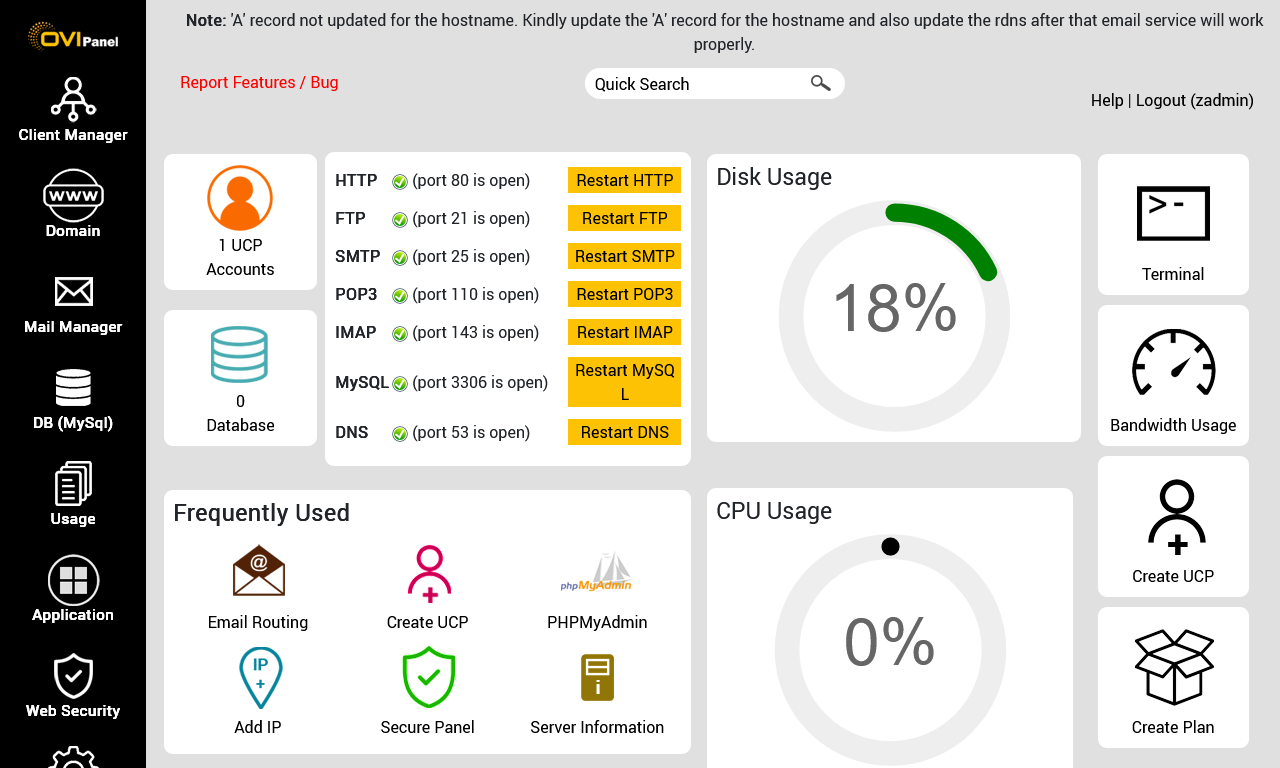

OVIPanel

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Free |
| License | Proprietary / GPLv3 / MIT / Apache |
| Supported OS | CentOS / AlmaLinux / Cloud Linux |
| Supported Cloud Providers | ? |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache / Nginx / OpenLiteSpeed |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND |
| DNS Zone Manager | √ |
| DNSSEC | × |
| Multi-PHP | √ |
| Database Server | MySQL / PostgreSQL / MongoDB |
| Database Admin | phpMyAdmin / phpPgAdmin |
| Email Server | Postfix / Dovecot |
| Webmail | Roundcube / RainLoop |
| FTP Server | ProFTPD |
| Caching | Varnish |
| | |
| Email Validation | SPF / DKIM |
| Spam Protection | SpamAssassin |
| Firewall | CSF |
| WAF | ModSecurity |
| Virus / Malware Scanning | Imunify360 / Linux Malware Detect (LMD) |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | √ |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | Webalizer |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | ? |
| File Manager | √ |
| | |
| Extendable by Plugins | √ |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | × |
| Server and Package Updates | CLI / GUI |
| Automatic Updates | × |
| Can be Uninstalled | × |

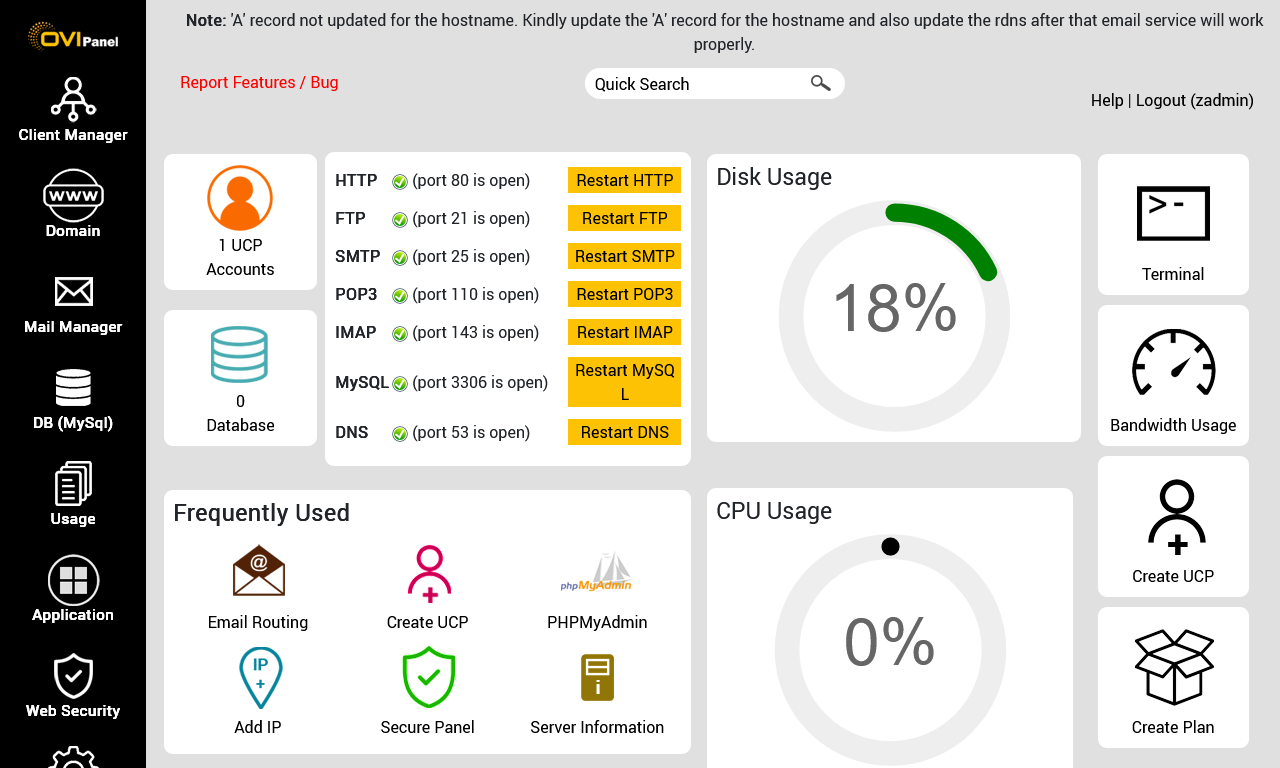

OVIPanel is a web hosting control panel (based on Sentora) that has a modern UI with separate panels for users and the admin. The admin GUI needs some work and additional features, because a lot of features that you expect to be in the GUI are absent. The free panel has massive potential and a growing community. This panel does have regular updates and is developed by a large hosting company in India.

There is a paid version which adds support but no extra features. The paid tiers are very reasonable and can give you some reassurance that you can get technical questions answered within 4-6 hours.

- Pros

- One Click cPanel to OVIPanel Migration.

- Cons

- Some of the documentation is out of date.

- The UI needs some tidying up.

- Apache modules can be configured from the GUI but not all modules are available.

Notes

- Sites

- General

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

Webuzo

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Paid |
| License | Proprietary |
| Supported OS | RHEL / CentOS / AlmaLinux / Rocky Linux / Ubuntu / CloudLinux / Scientific Linux |
| Supported Cloud Providers | Amazon Web Services (AWS) / DigitalOcean / Google Cloud Platform (GCP) / Microsoft Azure / Akamai (formerly Linode) |
| Install Method(s) | Script / Cloud Quick Launch |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache / Nginx / OpenLiteSpeed / LiteSpeed Enterprise / Lighttpd / NodeJS |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | √ |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND |
| DNS Zone Manager | √ |
| DNSSEC | × |
| Multi-PHP | √ |
| Database Server | MySQL / MariaDB / PostgreSQL / SQLite / MongoDB |
| Database Admin | phpMyAdmin / phpPgAdmin |
| Email Server | Exim / Dovecot |
| Webmail | Roundcube / RainLoop / WebMail Lite |
| FTP Server | Pure-FTPd |
| Caching | Redis / Memcached / Varnish |
| | |
| Email Validation | SPF / DKIM / DMARC |
| Spam Protection | SpamAssassin / RBL / MailChannels |
| Firewall | CSF / CXF |
| WAF | ModSecurity / OWASP / Brute Force Detection |
| Virus / Malware Scanning | ClamAV / ImunifyAV / ImunifyAV+ / Imunify360 / Linux Malware Detect (LMD) / Linux Environment Security (LES) |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | √ |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | AWStats |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | FTP / SFTP / AWS S3 / Google Drive |
| File Manager | √ |
| | |
| Extendable by Plugins | √ |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | Tiers based on number of accounts |
| Server and Package Updates | CLI / GUI |
| Automatic Updates | √ |
| Can be Uninstalled | × |

Webuzo is a multi-user control panel for hosting providers, resellers and website owners, designed and built by Softaculous. It's a powerful and easy-to-use web hosting control panel, used around the world, which helps you manage your cloud or dedicated server.

This panel can be installed on dedicated servers, cloud servers and virtual private servers. You can launch Webuzo instances in various clouds like Amazon Web Services, Google Cloud Platform (GCP), Microsoft Azure, Linode, DigitalOcean, etc. You can automate various admin tasks with the API and SDK.

Webuzo now has reseller capabilities and is definitely a candidate for a cPanel replacement. The top tier is a fair price, which includes unlimited domains, unlimited accounts and free support, but the lower tiers also allow the hobbyist to run this platform on their kit at home. The killer feature is that Webuzo offers an in-place conversion of your cPanel and WHMCS to use their platform, with no loss of data and no need to perform a migration to another IP address.

- Pros

- An excellent cPanel replacement.

- Has a tool to convert your cPanel server into a Webuzo server rather than doing a migration.

- Has a tool to convert your WHMCS (cPanel) to WHMCS (Webuzo).

- The 'Personal Cloud' tier has Softaculous included for free.

- The GUIs have a modern design and feel relaxing to use. Nice to look at, nice to use.

- Lots of plugins

- This is how cPanel should look.

- Ideal cPanel/Plesk replacement for business.

- Cons

- The pricing and plans need better descriptions.

- The demo is only for the client's section and not everything is available (i.e. email section is missing).

- Putting together the list of technologies this panel uses was hard.

- Need to sort the VPS/Dedicated pricing. Why should the price be different because of the platform when it is already limited by accounts?

- Pricey unless you go for the unlimited plan which is only suitable for business.

Notes

- Sites

- General

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

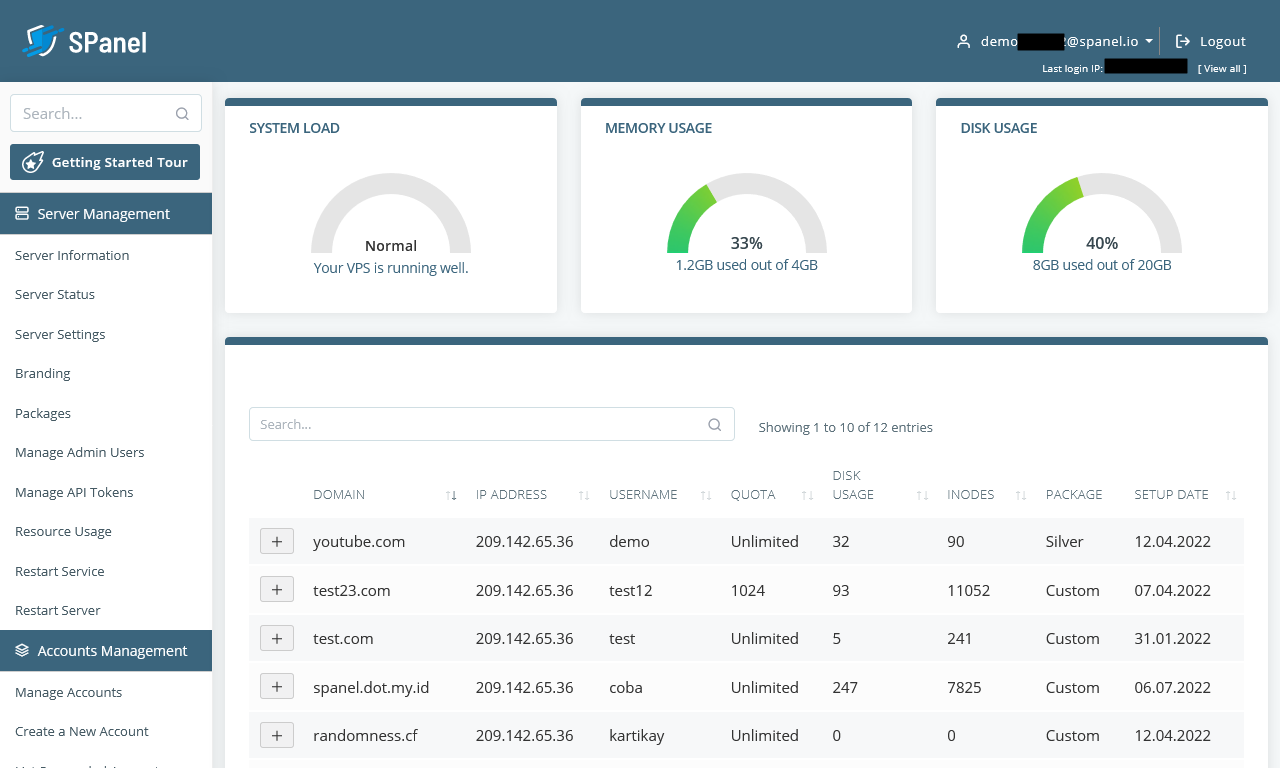

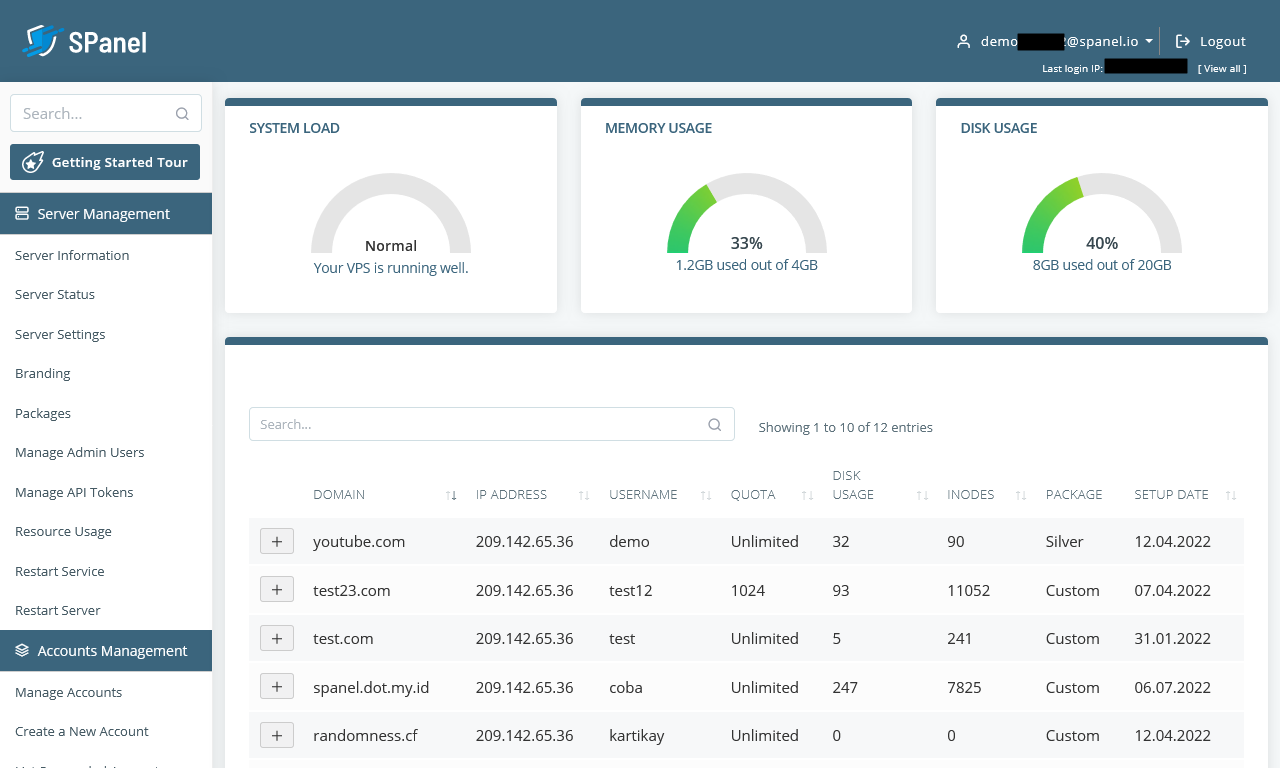

SPanel

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Paid |
| License | Proprietary |
| Supported OS | Rocky Linux |
| Supported Cloud Providers | Amazon Web Services (AWS) / DigitalOcean / Hetzner Cloud / Google Compute Engine (GCE) / Google Cloud Platform (GCP) / Microsoft Azure / Vultr / Akamai (formerly Linode) / Alibaba Cloud / Contabo / OVH |
| Install Method(s) | SaaS |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache / Nginx / OpenLiteSpeed / LiteSpeed Enterprise |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | √ |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND |
| DNS Zone Manager | √ |
| DNSSEC | × |
| Multi-PHP | √ |
| Database Server | MariaDB |
| Database Admin | phpMyAdmin |
| Email Server | Exim / Dovecot |
| Webmail | Roundcube / RainLoop |
| FTP Server | Pure-FTPd |
| Caching | Memcached |
| | |
| Email Validation | SPF / DKIM / DMARC |
| Spam Protection | SpamAssassin |
| Firewall | CSF |
| WAF | ModSecurity |
| Virus / Malware Scanning | SShield |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | √ |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | GoAccess |
| Cron Jobs | √ |
| Local Backup | SBackups |
| External Backup | ? |
| File Manager | √ |
| | |
| Extendable by Plugins | × |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | √ |
| Server and Package Updates | CLI / GUI |
| Automatic Updates | √ |
| Can be Uninstalled | × |

SPanel is a web hosting control panel developed by Scala Hosting in response to the ever-increasing cost of cPanel. It is billed as the next-generation cloud management platform, allowing every website owner to easily manage their server in a secure environment. This panel is an all-in-one cloud hosting platform allowing every site owner to host multiple websites on their own fully managed cloud VPS, and as such it is not designed for your own local servers; you must use a cloud-based provider that this software supports.

SShield is the all-in-one security solution of SPanel and is unique because it doesn't just rely on the existing virus and malware databases. Instead, it follows advanced algorithms for predictive analysis and cyber threat prevention: in short, a predictive AI for detecting and removing cyber threats.

SPanel is available for free with any Scala Hosting VPS plan.

- Pros

- A good cPanel clone with professional support

- SShield

- A feature request site where votes turn into features getting implemented

- Cons

- No demo unless you sign up

- No forum

- Expensive

- The documentation is terrible, there is hardly any information.

Notes

- Sites

- Homepage

- Demo - Request only

- Changelog

- Code Repository

- Forum

- Docs

- Plugins

- General

- Managed = They will remotely dial into your server and fix things

- Self Managed = The software only. This should be renamed 'Software Only'.

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

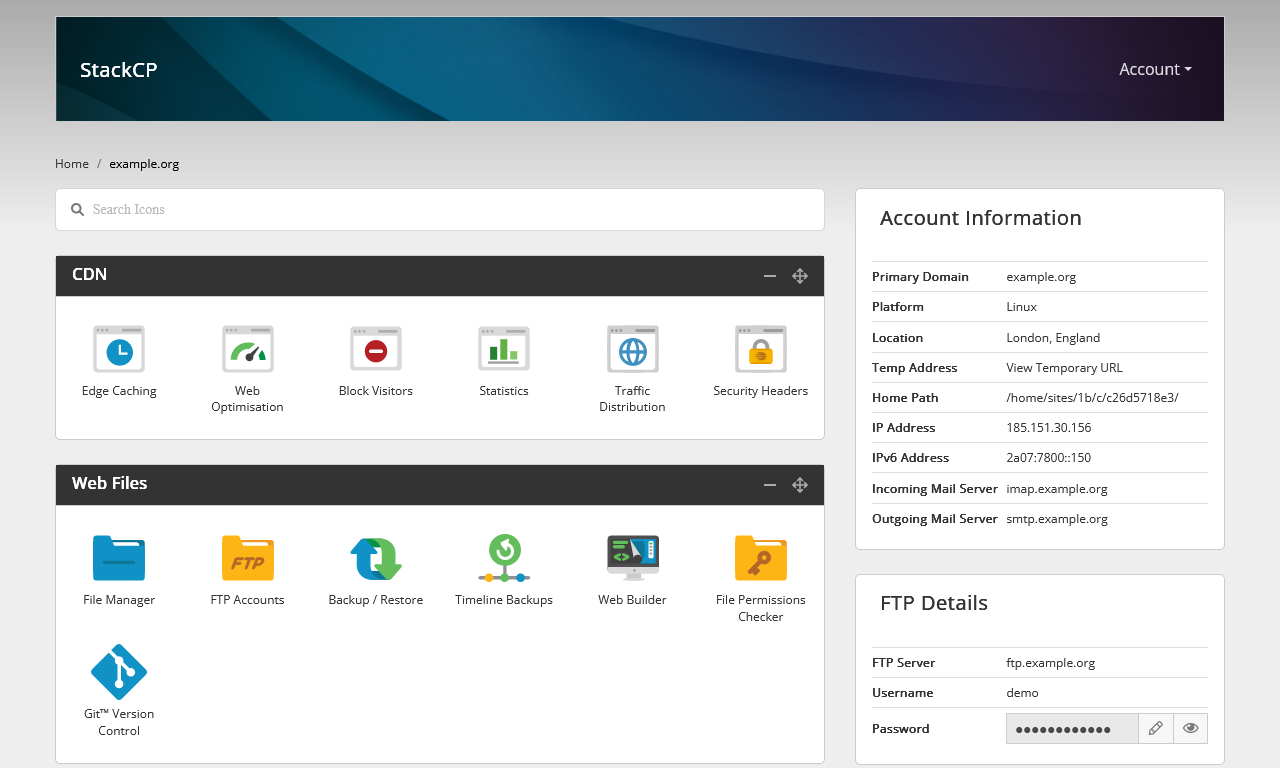

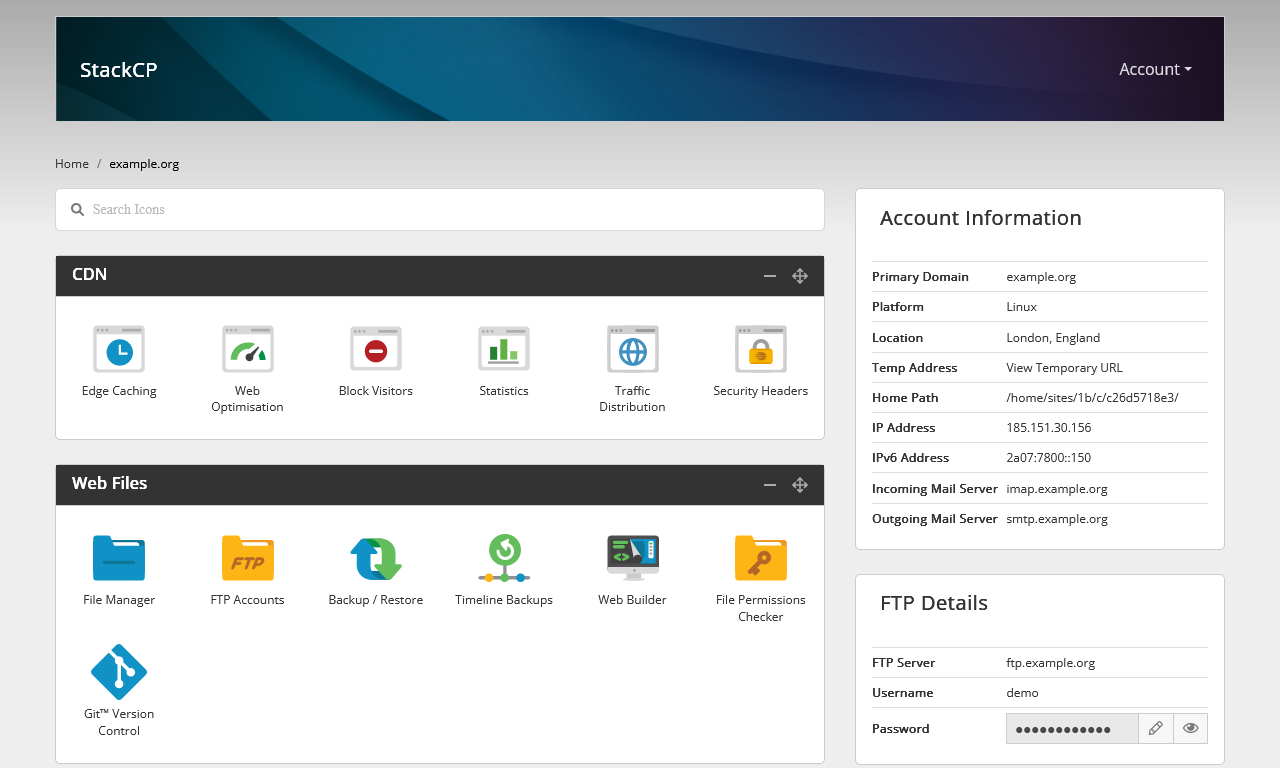

My20i / StackCP

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Hosting Company |
| Free/Paid | Free (can only be used with 20i.com or their resellers) |
| License | Apache |
| Supported OS | CentOS / Windows Server |
| Supported Cloud Providers | in-house / Amazon Web Services (AWS) / Google Cloud Platform (GCP). Note: the reseller hosting platform has been built in-house; AWS and Google Cloud are available as Managed Hosting products. |
| Install Method(s) | SaaS |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt |
| DNS Server | Google DNS |
| DNS Zone Manager | √ |
| DNSSEC | √ |
| Multi-PHP | √ |
| Database Server | MariaDB / MSSQL (extra cost for license) |
| Database Admin | phpMyAdmin |
| Email Server | Exim / Dovecot |
| Webmail | Roundcube (Stackmail) |
| FTP Server | ProFTPD |
| Caching | OPCache / CDN (in-house) / in-house WordPress cache plugin (StackCache) |
| | |
| Email Validation | SPF / DKIM |
| Spam Protection | Rspamd |
| Firewall | iptables / Firewalld / Voxility / DDOS protection (via Voxility) |
| WAF | ModSecurity / Brute Force Detection |
| Virus / Malware Scanning | ClamAV |
| | |
| Reseller Accounts | × |
| User Accounts | √ |
| Separate Panels (Admin / Users) | √ |
| Hosting Packages | × |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | AWStats / Webalizer |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | √ (only 20i.com can access, disaster recovery only) |
| File Manager | √ |
| | |
| Extendable by Plugins | × |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | × |
| Server and Package Updates | CLI / GUI |
| Automatic Updates | √ |
| Can be Uninstalled | × |

My20i and StackCP form a hosting platform from 20i.com that is a direct rival to cPanel, except that you need to take out hosting from 20i.com to use this panel. Best of all, it is free with no account limits.

20i.com develops My20i and StackCP in-house, and their resellers can use them for free. They refer to their platform as My20i.

The free version works ....................

- Pros

- You can change all of the login domains (my20i.com, stackcp.com, stackmail.com) to those of your choosing with CNAMEs if you require.

- All support is based in the UK

- The SaaS hosting model is truly unlimited.

- Hostshop, the in-house billing software, is free.

- Reseller Hosting comes with a free WHMCS module and you can get a discount on their WHMCS license.

- High load sites will be hived off to a fast server when a peak load occurs.

- Free migrations

- Free CDN

- No restrictions on accounts, bandwidth, space with the following exceptions:

- MySQL DB max size 1024 MB (might be overall or a single DB, not sure)

- Cannot use more than 10% of the 20i.com server resources

- Email mailboxes are max 10GB

- What limits are placed on the individual user accounts? Is there a link to the specs, i.e. inodes, processor, RAM, entry points…

- With regards to your query, there are not any inode limits in place and resources are scaled based on demand.

- When a website receives a large number of hits it is quickly isolated and moved to its own dedicated backend - this is raw hardware with no overhead at all - multiple 48-core machines for the site that is busy. Power that would quite literally cost hundreds per month to buy for a site otherwise. Once the “busy period” is over, your site is moved back to the normal infrastructure.

- 1TBps DDOS protection (via Voxility).

- Documentation is excellent.

- Cons

- You have to use 20i.com hosting to use this panel (but the hosting is actually good)

- MSSQL = additional £10.00 /pm (but this is for the license so not 20i.com's fault)

- 20i.com only does wildcard LetsEncrypt certificates for each account, which means the platform does not support A record forwarding from remote DNS servers. This is because LetsEncrypt only issues wildcard certificates via DNS validation, so the requester must have full control over the domain's DNS (i.e. its nameservers point to 20i.com servers).
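To see why wildcard certificates tie you to the 20i.com nameservers, it helps to look at what the ACME DNS-01 challenge (RFC 8555) asks for: a TXT record published in the domain's own zone. The sketch below computes the record name and value. The function names are my own, and the token and account thumbprint are placeholder strings; in a real exchange they come from the certificate authority and the ACME account key.

```python
import base64
import hashlib

def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as ACME requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def dns01_record(domain: str, token: str, account_thumbprint: str):
    """Return the (name, value) of the TXT record for a DNS-01 challenge."""
    # A wildcard such as *.example.com is validated against the base domain.
    base = domain[2:] if domain.startswith("*.") else domain
    key_authorization = f"{token}.{account_thumbprint}"
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    return f"_acme-challenge.{base}", b64url(digest)

# Placeholder values for illustration only.
name, value = dns01_record("*.example.com", "example-token", "example-thumbprint")
print(name, "TXT", value)
```

Only whoever runs the authoritative nameservers for the domain can publish that TXT record automatically, which is why pointing just an A record at the platform is not enough for a wildcard certificate.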

Notes

- Sites

- General

- Email

- SSL (LetsEncrypt)

- How to auto-activate SSLs for websites | 20i

- With this feature you, as a hosting reseller, can provide an extra service to your customers. With SSL certificates being installed automatically, your customers do not have to worry about this security feature for their websites.

- Please note that for 20i Resellers, this option is turned on by default.

- Can I use the free SSL if my site doesn’t use the 20i nameservers? | 20i

- Let’s Encrypt require the authoritative nameservers are set to our own to issue their Wildcard Certificates under the ACME DNS verification method - That is not something we control.

- This means you can only install the free SSL if the website's nameservers point to 20i. You cannot point external A records at the 20i platform and have SSL certificates installed automatically from LetsEncrypt.

- Q:

- We have a lot of accounts that only have the A records pointed to our server, and cPanel manages this OK. How can this be worked around, as it is a very common practice?

- Can you not revert to using SAN? Perhaps share this as a feature request?

- A: ??? No solution yet.

- Does Let’s Encrypt issue wildcard certificates? | Let's Encrypt
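Whether a domain qualifies for the free wildcard SSL comes down to where its nameservers point, as described in the notes above. A minimal shell sketch of that check follows. The function name `ns_all_match` and the `stackdns.com` suffix in the usage line are assumptions for illustration only (confirm 20i's actual nameserver hostnames in their documentation), and `dig` is assumed to be installed.

```shell
#!/bin/sh
# ns_all_match SUFFIX
# Reads nameserver hostnames (one per line) on stdin and succeeds only if
# there is at least one and every one of them ends in SUFFIX.
# Hypothetical helper, not part of any 20i tooling.
ns_all_match() {
    suffix=$1
    count=0
    while IFS= read -r ns; do
        ns=${ns%.}                  # dig prints a trailing dot; strip it
        [ -n "$ns" ] || continue    # skip blank lines
        case "$ns" in
            "$suffix" | *."$suffix") count=$((count + 1)) ;;
            *) return 1 ;;          # a nameserver outside SUFFIX was found
        esac
    done
    [ "$count" -gt 0 ]
}

# Usage (needs network access, so it is left commented out):
#   dig +short NS example.com | ns_all_match stackdns.com \
#       && echo "nameservers match" || echo "nameservers point elsewhere"
```

If the check fails, the domain is only pointing A records at the platform, which is the case the unanswered question above is about.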

- Questions to Sales Rep

- Fair usage explained

- There is no fair usage policy for number of hosting accounts, space and bandwidth, but there is a max size of 1024 MB (1 GB) for a database.

- is .htaccess supported?

- Yes .htaccess is supported

- is mail() allowed?

- Is deactivate same as suspend (i.e. for 50 days then it is terminated)?

- Yes this is the same as suspended where the website is not accessible via the internet but the data and web space are still in your 20i account.

- Can we do remote backups of our client accounts to Wasabi/AWS S3?

- We do not have a feature where you can remotely store backups. However, you can take a back up of all your websites and databases using the backup/restore tool.

- You can do a bulk backup with this and this will create a .zip file for you to download.

- You will also have access to FTP for individual hosting control panels and Master FTP for all websites and remote MySQL for individual control panels.

- Do you get a choice of Linux or Windows for My20i/StackCP to sit on?

- Yes, when you create a webspace for a domain, you will be given the option to host on Linux, WordPress or Windows.

- Can we move away if needed ie. cPanel compatible transfer sort of thing?

- You can move away if you need to do so, however we do not have an automated transfer out tool available.

- You can download a copy of your website files and database into a zip file and then upload the data into your new host.

- Do you offer an API (i.e. cPanel/WHM compatible) that external/alternative hosting providers can use to transfer out my accounts from your servers to theirs?

- We unfortunately don't have an API or other kind of tool that can be used to migrate hosting packages off of our Platform I'm afraid. Apologies about that.

- Q: So once we are on your servers, we are stuck unless we want to move everything manually?

- A: I'm afraid you would have to manually backup the sites in order to move off our platform yes.

- Are StackCP.com and www.20i.com only for 20i.com and their resellers?

- Yes, stackcp.com and my.20i.com are only available for Resellers and 20i.com

- Do we get access to a command line (CLI), jailed or otherwise?

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

Small Hosting Company

These panels can be used for selling hosting but are aimed at small hosting companies because they generally need more admin input to keep going, or do not have all of the features of the panels above. Hobbyists and techies can also use these to run their own servers from home.

KeyHelp

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Small Hosting Company |
| Free/Paid | Both |
| License | Proprietary |
| Supported OS | Debian / Ubuntu |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND |
| DNS Zone Manager | √ |
| DNSSEC | × |
| Multi-PHP | √ |
| Database Server | MySQL / MariaDB |
| Database Admin | phpMyAdmin |
| Email Server | Postfix / Dovecot |
| Webmail | Roundcube / RainLoop / SnappyMail |
| FTP Server | ProFTPD |
| Caching | × |
| | |
| Email Validation | SPF / DKIM / DMARC |
| Spam Protection | SpamAssassin / Amavis / Greylisting (in Debian version only) |
| Firewall | iptables |
| WAF | Fail2Ban |
| Virus / Malware Scanning | ClamAV |
| | |
| Reseller Accounts | × |
| User Accounts | √ |
| Separate Panels (Admin / Users) | × |
| Hosting Packages | × - Limits are set by the admin on the User's account |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | AWStats |
| Cron Jobs | √ |
| Local Backup | Backups with Snapshots feature |
| External Backup | FTP / SFTP / WebDAV / KeyDisc / Dropbox / Custom with Restic / Rclone |
| File Manager | √ |
| | |
| Extendable by Plugins | × |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | × |
| Server and Package Updates | GUI |
| Automatic Updates | √ |
| Can be Uninstalled | × |

KeyHelp is a Control Panel for Users and Server Administrators. Regardless of whether you are a user of a web hosting plan or an administrator of a server – an easy-to-use management interface with many functions and options is appreciated by everyone equally. The Server Hosting Panel KeyHelp is aimed at administrators, resellers and users. The KeyHelp server hosting panel is developed by the Thuringian (German) hosting provider Keyweb and made available to the public free of charge.

The free version is not crippled and the UI is ultra modern and responsive. If you plan to use this to sell web hosting be aware that it does not have a reseller component and you realistically will need to buy the Pro version for those little extras it gives you. Great for the inexperienced Linux convert who wants to do web hosting.

This responsive and stylish panel is not as mature as cPanel but has a passionate team behind its development and is one to watch. This should be in your shortlist because of how easy it is to use and set up.

- Pros

- The client panel is very nice.

- Can Export/Import server settings

- Linux knowledge will be required for some features and maintenance.

- There is an easy login link to phpMyAdmin

- 2FA is available

- Specialist SSH connection for KeyHelp staff (enabled by default), similar to Divi's Help system.

- Settings --> Configuration --> Miscellaneous --> Support Access

- Active community (German and English)

- Developed in Germany.

- Service Monitor (via port monitoring)

- Allows remote database access

- Clients can change their PHP version

- Available in 19 different languages.

- Easy to swap/add additional versions of PHP

- Can import and export KeyHelp settings

- Backup can support many different endpoints using Restic and Rclone

- For business, the paid plan is very acceptable.

- Cons

- Does not work behind a NAT router

- Linux knowledge is required for some tasks.

- No CSF firewall

- There is a user workaround below, but it is not official.

- No GUI option to install ModSec

- Cannot do advanced tuning of Apache in the GUI.

- No Reseller accounts

- Resources and permissions are set per user, there are no packages.

- No DNSSEC

- Cannot change the Panel's port, but it is a planned feature.

- SPAM control limited

- No GUI to edit PHP.ini

- No Client GUI to control PHP settings

- No Package manager

- File Manager has some limitations including not being able to zip up the root www directory.

- No root filemanager (this was mentioned as security measure)

- No Terminal in the GUI

- No Integrated phpMyAdmin Session (the developer says he chose not to do this, by design)

- My Wishlist

Notes

- Sites

- General

- Settings

- Changing a user's PHP settings

- These are controlled by editing the user's profile in the admin panel.

- post_max_size and upload_max_filesize - KeyHelp Community

- If you change the template afterwards it does not affect existing customers. You must change the user php settings.

- or re-apply the account template in the user management of the corresponding users.

- Email

- Email functionality is not enabled immediately, maybe dependent on a CRON?

- Forwarding email without a mailbox

- Features are missing in the client area

- Edit their resources in the admin area. If there is a 0, this means none rather than the usual unlimited.

- API

- Database

- By default, database names and usernames are automatically generated, but this can be turned off:

- Settings --> Configuration --> System --> Database Server --> Rules for assignment of database / usernames

- Session Timeout

- Configuration --> Security --> Login & Session --> Session idle time:

- Default = 24mins

- php.ini

- This has to be set manually unless the settings in the 'User Resources'/'Account Templates' are enough

- Allow the use of NAT network (workaround 1 - tested)

- Edit the network interface and add your external IP as an alias to the real adapter (not the loopback).

sudo nano /etc/network/interfaces

- Identify your network card's ID, in my case eth0

- Add the following code after your network card's definition making sure you update the eth0:0 to match your card (eth0 = your network card, :0 = alias definition.), and update the external IP to match the one you want.

#Secondary IP Address

auto eth0:0

iface eth0:0 inet static

address 31.31.31.31

netmask 255.255.255.255

- Restart the network service

sudo systemctl restart networking

- Goto KeyHelp --> Settings --> Configuration --> System --> IP Addresses

- You now can see your local IP ticked, and your external IP is present but unticked. Swap them over and save.

- KeyHelp --> Miscellaneous --> Bulk Operations --> Rewriting user configuration files

- You only need to redo 'DNS server configurations'

- This will add a job to the system to run when the system cron is next triggered (usually every minute)

- All your domains should now have their A records updated to your external IP address.

- Edit the network interface and remove the alias.

- Restart the network service

sudo systemctl restart networking

- Done

- All your DNS records should now have your external IP for their A record.

- As long as the external IP (in ...System --> IP Addresses) stays selected it will remain as it is stored in the database.

- If this workaround is removed upon an update, repeat the procedure and re-apply.

- I think this should be permanent, but I cannot guarantee what is changed during an update. I am sure it can be re-applied.

- The IP addresses that you are changing takes a minute or two to update.

- alias - How do I add an additional IP address to an interface in Ubuntu 14 - Ask Ubuntu - This will work for Debian as well.

- Allow the use of NAT network (workaround 2 - untested)

- Goto KeyHelp --> Settings --> Configuration --> System --> IP Addresses

- Edit the DOM and change the local IP to the external IP and then submit.

- This might take a bit of fiddling to get right, but you get the idea
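The alias stanza from workaround 1 can be generated rather than typed by hand, which helps avoid a mismatch between the interface name and its alias. This is only a minimal sketch: `alias_stanza` is a hypothetical helper, and the interface, alias index and IP in the usage lines are the example values from the steps above.

```shell
#!/bin/sh
# alias_stanza IFACE INDEX IP
# Prints an /etc/network/interfaces stanza that adds IP as alias INDEX on
# IFACE (ifupdown syntax, as used in workaround 1). Hypothetical helper.
alias_stanza() {
    iface=$1
    index=$2
    ip=$3
    printf '# Secondary IP Address\n'
    printf 'auto %s:%s\n' "$iface" "$index"
    printf 'iface %s:%s inet static\n' "$iface" "$index"
    printf '    address %s\n' "$ip"
    printf '    netmask 255.255.255.255\n'
}

# Usage (as root; review the output before appending it):
#   alias_stanza eth0 0 31.31.31.31 >> /etc/network/interfaces
#   systemctl restart networking
```

Printing the stanza instead of editing the file directly keeps the helper harmless, and it makes removing the alias afterwards a matter of deleting those five lines.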

- Plugins

- Rspamd (Fast, free and open-source spam filtering system.)

- General --> System Status --> Email --> Rspamd Web Interface

- Official Rspamd documentation

- Filtering out spam with rspamd workaround.org - You have come a long way in this guide and your mail server is already fully functional. Now it's time to deal with the dark side: spam.

- File Locations / Repo Locations / Key Locations

- Configuration Files & Storage Paths - KeyHelp Knowledge Base

- Panel, phpMyAdmin, webmail and other web apps

/home/keyhelp/www/

- The .htpasswd created by KeyHelp, is stored at:

/etc/apache2/keyhelp/htpasswd/.htpasswd_superadmin_xxxxx52984ec401ebedf74286a243e4e

- Settings, Templates and Domain zones backup - KeyHelp Community

- KeyHelp stores all mentioned items in its database, which is always part of a backup (server backup).

- Additionally, the KeyHelp related databases (`keyhelp`, `roundcube`, `rainloop`, `phpmyadmin`) are backed up daily to /home/keyhelp/keyhelp.backup/dumps/.
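Because those daily dumps are the fallback copy of the panel's settings, it can be worth confirming that one was actually written recently. A small sketch, assuming only the dump directory named above. The helper name `has_recent_dump` is made up for illustration, and nothing is assumed about the file names inside the directory.

```shell
#!/bin/sh
# has_recent_dump DIR
# Succeeds if DIR contains at least one regular file modified within the
# last 24 hours. Hypothetical helper, not a KeyHelp command.
has_recent_dump() {
    dir=$1
    [ -d "$dir" ] || return 1
    # -mtime -1 matches files modified less than one day ago
    find "$dir" -type f -mtime -1 | grep -q .
}

# Usage on a KeyHelp server (commented out so the block has no side effects):
#   has_recent_dump /home/keyhelp/keyhelp.backup/dumps/ || echo "no dump in the last 24h"
```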

- Apache Configs

/etc/apache2/keyhelp/

This has invisible redirects as shown in this example

# Rspamd

RewriteEngine on

RewriteRule ^/rspamd$ /rspamd/ [R]

ProxyPreserveHost On

ProxyPass /rspamd http://localhost:11334/

ProxyPassReverse /rspamd http://localhost:11334/

- phpMyAdmin

- /home/keyhelp/www/phpmyadmin/config.inc.php

- Changes to the phpMyAdmin config will get wiped out upon KeyHelp updates

- Apps are all accessed from the root account's domain even if instigated from the client account, i.e.:

- Install

- After you have created a user's account and domain, you need to go back into the account and correctly configure the resources it is allowed.

- OS upgrades are taken care of by custom scripts the KeyHelp team write.

- No GUI to add and remove PHP extensions, this has to be done by apt?

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

- Backup

- Ubuntu vs Debian

- KeyHelp adds new features to the Debian version first when they depend on the underlying technology and package availability, e.g. the new SPAM system.

- Ubuntu is always 6 months behind Debian updates

- Ubuntu or Debian? Which One is good for KeyHelp? - KeyHelp Community

- Ubuntu isn't as good as Debian as it's not always free of upgrade/update bugs.

- My advise, Servers is Debian, no question about it.

- Also Debian has a far longer lifespan in support then any other distro.

- Data Collector Script for Community Support - KeyHelp Community - Jolinar along with some community members has developed a shell script that gathers essential system information on a KeyHelp Server and provides it in a text file. This allows them to offer proper support in the forum without having to painstakingly request and compile all the necessary information each time.

Froxlor

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Small Hosting Company |
| Free/Paid | Free |
| License | GPLv2 |
| Supported OS | Debian / Ubuntu |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | × |
| Web Server | Apache / NGINX / LigHTTPd (for backend) |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND / PowerDNS |
| DNS Zone Manager | √ |
| DNSSEC | × |
| Multi-PHP | √ |
| Database Server | MariaDB |
| Database Admin | × |
| Email Server | Postfix / Dovecot |
| Webmail | × |
| FTP Server | Pure-FTPd |
| Caching | OPcache |
| | |
| Email Validation | SPF / DKIM |
| Spam Protection | × |
| Firewall | × |
| WAF | × |
| Virus / Malware Scanning | × |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | × |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | √ |
| Cron Jobs | √ |
| Local Backup | × |
| External Backup | × |
| File Manager | × |
| | |
| Extendable by Plugins | × |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | × |
| Server and Package Updates | CLI |
| Automatic Updates | × |
| Can be Uninstalled | √ |

Froxlor is the lightweight server management software for your needs. Developed by experienced server administrators, this open source (GPL) panel simplifies the effort of managing your hosting platform. Froxlor is ideal for a hobbyist because a lot of things need to be set up manually, which requires knowledge of Linux. It has no dedicated reseller system: resellers are simply admins with restricted permissions, and customers can be assigned to them.

The panel will generate scripts for you for some actions and you will need to run these as root in the terminal manually, but this is not hard, just an extra step. Froxlor will need to have all of its settings checked upon first installation but this task is not hard and only needs to be done once.

- Pros

- The GUI is really modern and clean and is easy to navigate around.

- OPcache is setup from the start with a nice statistics page showing you various metrics.

- There is an active community and developers working on this project. I found it very easy to setup and use.

- Froxlor could be used with a more in-depth apache config panel such as Cockpit or other webservers panel as it reads all settings from the config files.

- Can be uninstalled.

- Uses the raw config files on the system rather than its own.

- Cons

- Linux experience needed for some features and changes.

- You will need to use the command line to do certain tasks.

- phpMyAdmin is not installed.

- You will need Linux knowledge because not everything is performed through the panel.

- Very Stable

Notes

- Sites

- General

- Admin / Resellers

- Admins / Resellers | Froxlor Documentation

- Admin and reseller accounts in froxlor are administrative users. This means they can not have a website, email-accounts or similar for themselves. Both of the administrative users are only separated by permissions given from the parent administrative user. So an admin with fewer permissions might be considered a reseller

- Not all settings are exposed in the panel

- Changes can take up to 5 minutes when the cron is run

- it has a cron system

- PHP settings are stored in Froxlor and then, I guess, merged onto the real php.ini, which is probably a good thing as it allows settings to survive updates.

- Supports perl

- The Froxlor frontend itself uses the Froxlor API backend too.

- Froxlor is the fork of Syscp.

- Froxlor has been going since at least 2012 which means it is very stable.

- Settings

- Change the panels port

- Resources --> IPs and Ports

- Edit both the 80 and 443 as follows

- 80 --> 2082 + make sure 'Create Listen statement' is on, change nothing else

- 443 --> 2083 + make sure 'Create Listen statement' is on, change nothing else

- Add a new mapping

- Port 80 and your server IP. This will get rid of the Ubuntu/Apache default landing page

- Custom docroot = ? | If this is not set then Froxlor will load once again on port 80

- Wait up to 5 minutes for the changes to be applied

- The default Apache page reads: "You should replace this file (located at /var/www/html/index.html) before continuing to operate your HTTP server." Once you move Froxlor off port 80, you need to replace this file.

- Force Froxlor control panel to be HTTPS

- System --> Settings --> Froxlor VirtualHost settings --> Enable SSL-redirect for the froxlor vhost

- Reset admin password

SSH to your server

root@froxlor:~# mysql -u root -p

Enter in the SQL root password (should be the same as your `froxroot`)

MariaDB [(none)]> USE froxlor;

MariaDB [(froxlor)]> UPDATE `panel_admins` SET `password` = MD5('my-secret-password') WHERE `adminid` = '1';

or

MariaDB [(froxlor)]> UPDATE `panel_admins` SET `password` = MD5('my-secret-password') WHERE `loginname` = 'admin';

Query OK, 1 row affected (0.020 sec)

Rows matched: 1 Changed: 1 Warnings: 0

Done
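The same reset can be prepared from the shell, so the plaintext password is hashed locally instead of being typed into the MySQL prompt. This is a sketch under the same assumption the steps above make, namely that the `password` column accepts a plain MD5 hex digest. The helper names `md5_hex` and `froxlor_reset_sql` are invented for illustration, and `md5sum` is assumed to be available (it ships with GNU coreutils).

```shell
#!/bin/sh
# md5_hex STRING: prints the lowercase hex MD5 digest of STRING,
# which is the same value MySQL's MD5() returns for that string.
md5_hex() {
    printf '%s' "$1" | md5sum | cut -d' ' -f1
}

# froxlor_reset_sql LOGIN PASSWORD
# Prints (does not run) an UPDATE statement for the panel_admins table.
# LOGIN is restricted to a safe character set so it cannot break the quoting.
froxlor_reset_sql() {
    login=$1
    password=$2
    case "$login" in
        '' | *[!A-Za-z0-9_.-]*) echo "invalid login name" >&2; return 1 ;;
    esac
    printf "UPDATE \`panel_admins\` SET \`password\` = '%s' WHERE \`loginname\` = '%s';\n" \
        "$(md5_hex "$password")" "$login"
}

# Usage (review the statement first, then pipe it into the client):
#   froxlor_reset_sql admin 'my-secret-password' | mysql -u root -p froxlor
```

The helper only prints the statement, so you can inspect it before running it against the `froxlor` database.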

- Plugins

- File Locations / Repo Locations / Key Locations

- Froxlor control panel files: /var/www/html/froxlor/

- Default Skeleton File: /var/www/html/froxlor/templates/misc/standardcustomer/index.html

- Customer data: /var/customers/

- Add your own template in Froxlor

- Install

- Update / Upgrade

- Uninstall

- Uninstall Froxlor - General Discussion - Froxlor Forum

- Uninstall tutorial? What more then "rm -rf /var/www/froxlor/" and possibly the auto-generated vhost-configs in the corresponding directory do you need? Froxlor does not bind itself deep to the system like others...there's not more to it then just remove our files (and database if you like, doesnt matter).

- Installation Instructions

- Always use your external IP when setting Froxlor up

- These are my notes to help me quickly install. However the Froxlor installation notes are excellent and this section might get removed.

- Login as root

- Run these commands

apt-get -y install apt-transport-https lsb-release ca-certificates gnupg

curl -sSLo /usr/share/keyrings/deb.froxlor.org-froxlor.gpg https://deb.froxlor.org/froxlor.gpg

sh -c 'echo "deb [signed-by=/usr/share/keyrings/deb.froxlor.org-froxlor.gpg] https://deb.froxlor.org/ubuntu $(lsb_release -sc) main" > /etc/apt/sources.list.d/froxlor.list'

apt-get update && apt-get upgrade

apt-get install froxlor

- Which services should be restarted?

- General mail configuration type:

- Option 2 if you don't know

- Open the MySQL client as root

mysql -u root

- Run these MySQL commands to create a privileged user. Swap CHANGEM3 for a password of your choice.

CREATE USER 'froxroot'@'localhost' IDENTIFIED BY 'CHANGEM3';

GRANT ALL PRIVILEGES ON *.* TO 'froxroot'@'localhost' WITH GRANT OPTION;

FLUSH PRIVILEGES;

EXIT;

- Run these commands

- Run this command (this prevents a PHP module issue in the WebInstaller)

service apache2 restart

- Now go to the webinstaller:

http://{your-ip-address}/froxlor

- Follow the wizard

- Done

- Misc

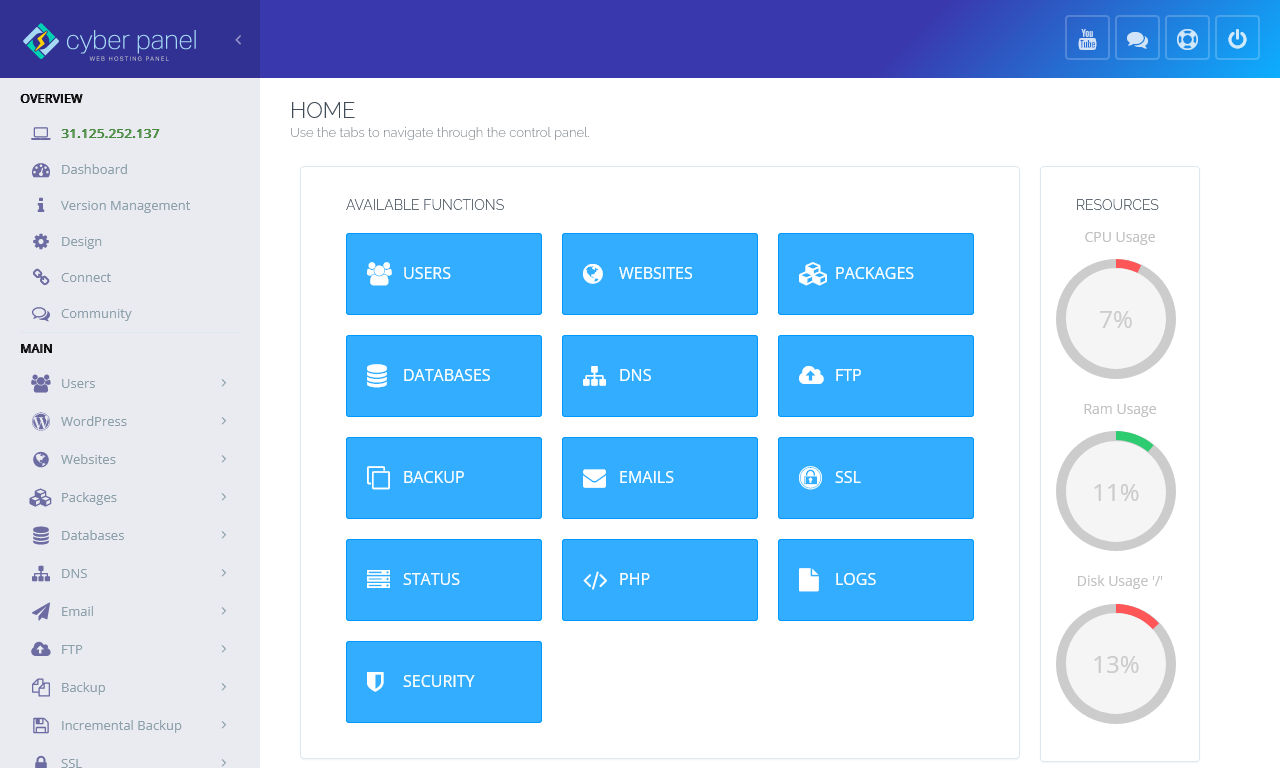

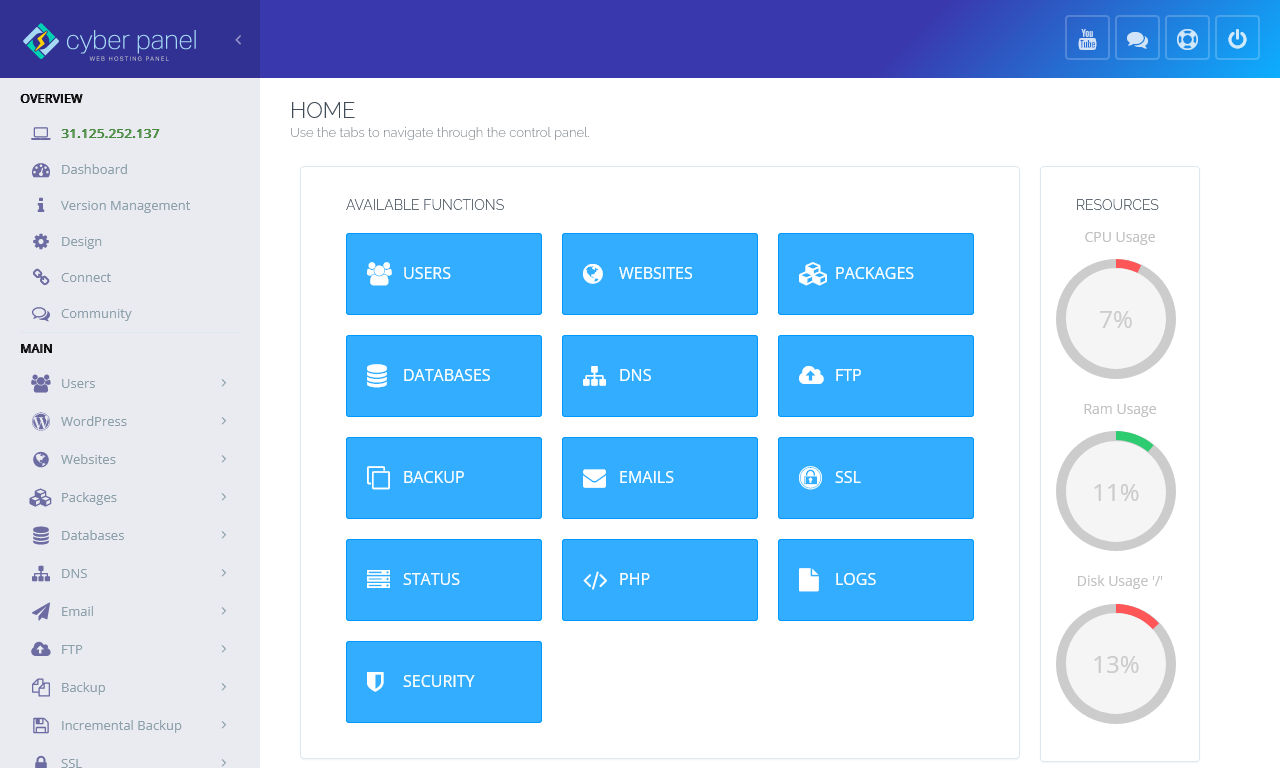

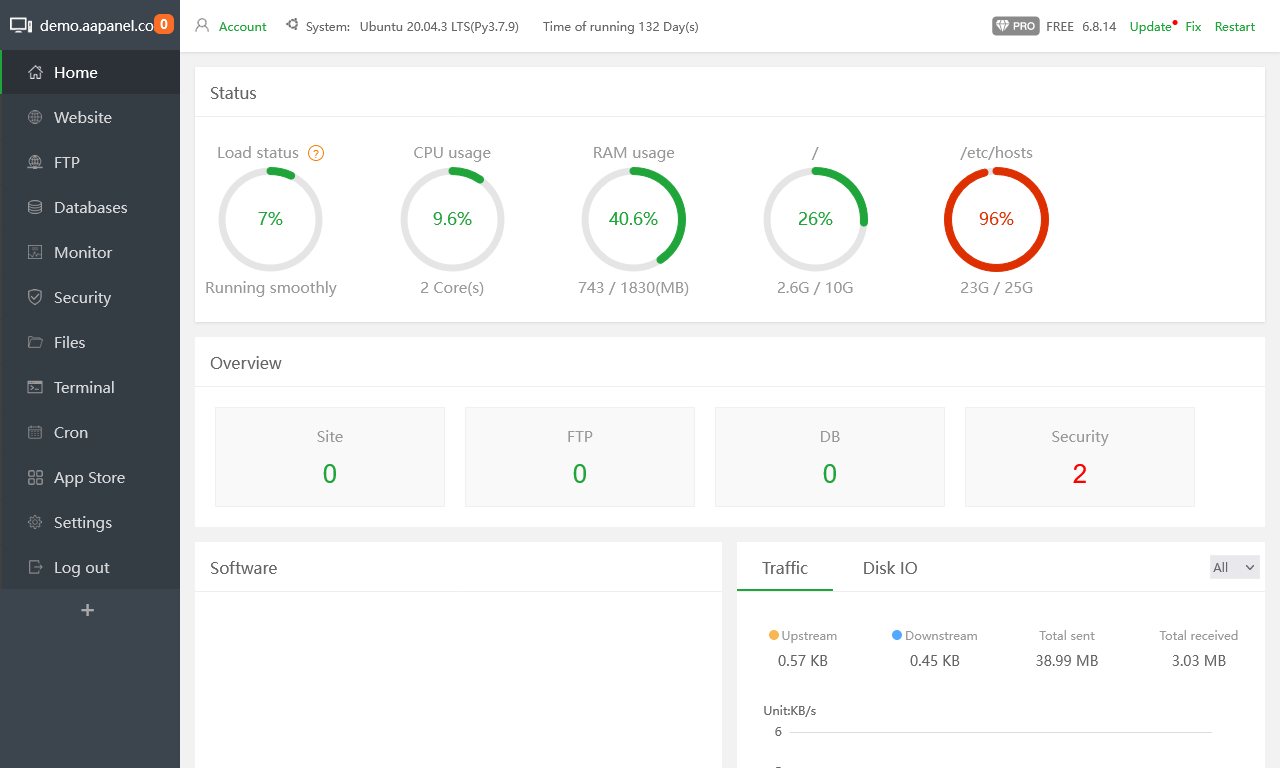

CyberPanel

| Features | Status |
| --- | --- |
| | |
| Primarily Designed For | Small Hosting Company |
| Free/Paid | Both |
| License | Proprietary / GPLv3 |
| Supported OS | AlmaLinux / CentOS / Ubuntu |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | Docker |
| Web Server | OpenLiteSpeed / LiteSpeed Enterprise |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | √ |
| AutoSSL | LetsEncrypt |
| DNS Server | PowerDNS |
| DNS Zone Manager | √ |
| DNSSEC | × |
| Multi-PHP | √ |
| Database Server | MariaDB |
| Database Admin | phpMyAdmin |
| Email Server | Postfix / Dovecot |
| Webmail | SnappyMail |
| FTP Server | Pure-FTPd |
| Caching | Memcached / Redis / LiteSpeed Cache (LSCache) |
| | |
| Email Validation | SPF / DKIM |
| Spam Protection | SpamAssassin / MailScanner / Rspamd (Paid Addon) |
| Firewall | iptables / CSF / Firewalld |
| WAF | ModSecurity / OWASP |
| Virus / Malware Scanning | ClamAV / MailScanner / ImunifyAV / Imunify360 |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | × |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | × |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | SFTP / AWS S3 |
| File Manager | √ |
| | |
| Extendable by Plugins | √ |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | × |
| Server and Package Updates | CLI / GUI |
| Automatic Updates | × |
| Can be Uninstalled | × |

CyberPanel is a web hosting control panel which is based on OpenLiteSpeed. It comes with built-in support for DNS, FTP, Email, File Manager and automatic SSL.

The free version works well but the webserver is crippled, so it could not be used for professional web hosting. It is ideal for running multiple websites on a budget. LiteSpeed Enterprise is a powerful webserver and is ideally suited for professional web hosting, but it does come at a cost. OpenLiteSpeed does not support all Apache .htaccess directives, whereas LiteSpeed Enterprise is a complete Apache drop-in replacement and does.

To use the full power of this panel it can get quite expensive, and the free version does have some other crippled features, such as no root file manager. A one-time payment for all the addons is not too bad, and if you like this panel I encourage you to purchase it as a one-time payment rather than paying every month. I do not know if this covers all future versions of the panel and plugins.

CyberPanel with OpenLiteSpeed allows you to host unlimited domains at no cost. However, with LiteSpeed Enterprise you can host 1 domain for free, for further domains/details visit the pricing page.

- Pros

- Uses the OpenLiteSpeed / LiteSpeed Enterprise web server.

- Docker manager.

- Can be extended by plugins.

- HTTP/3 and QUIC support.

- A key feature LiteSpeed introduces for end users is the LSCache module. This allows users to enable the LSCache plugins on a number of content management systems such as WordPress, Joomla, and Magento.

- Cons

- Linux experience needed for some features and changes.

- Free version is crippled and the paid version can get expensive.

- Free version does not have a root file manager

Notes

- Sites

- General

- Settings

- Plugins

- File Locations / Repo Locations / Key Locations

- panel files: /usr/local/CyberCP/

- Install

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

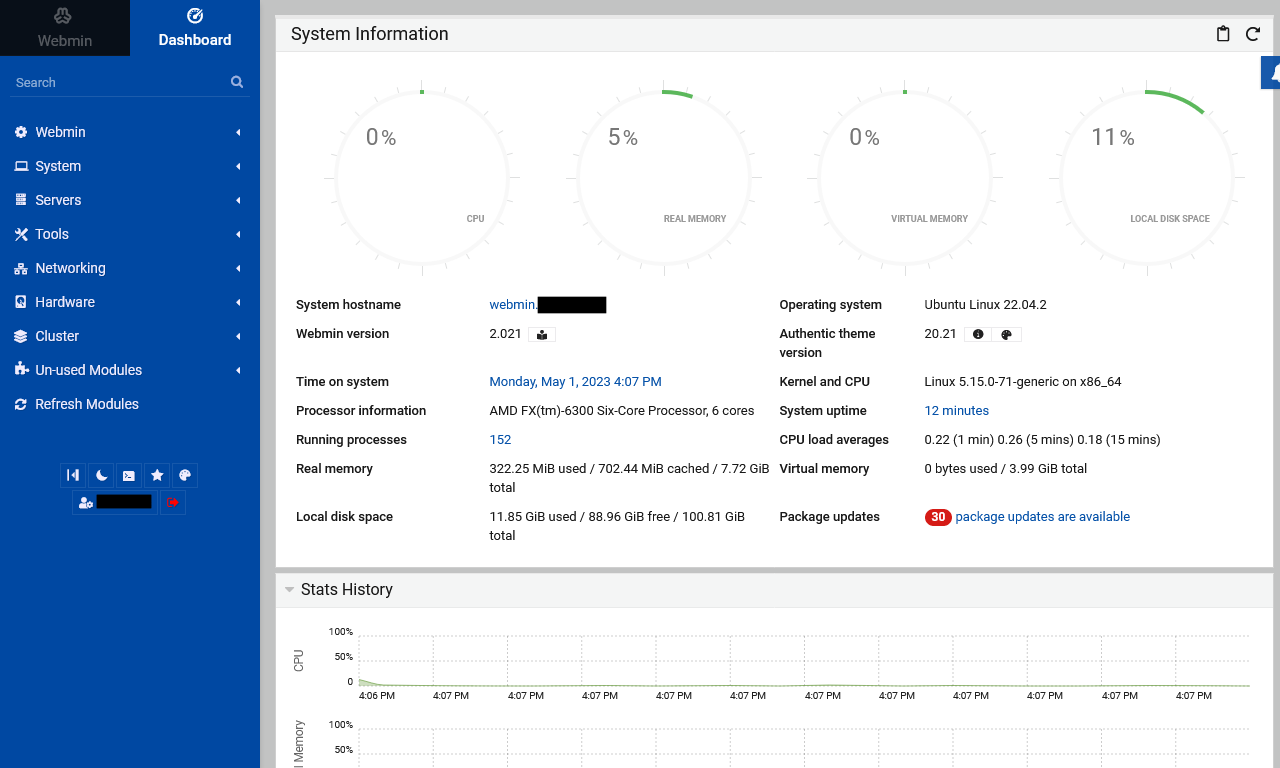

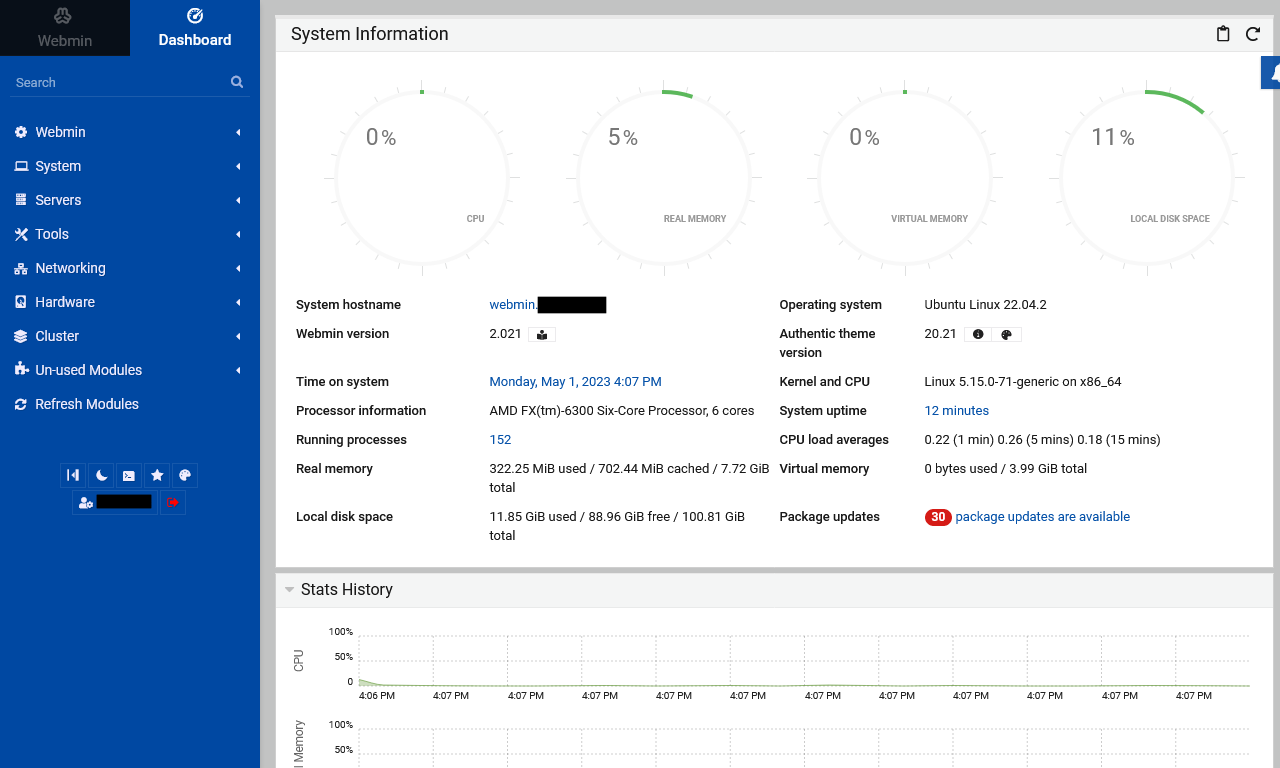

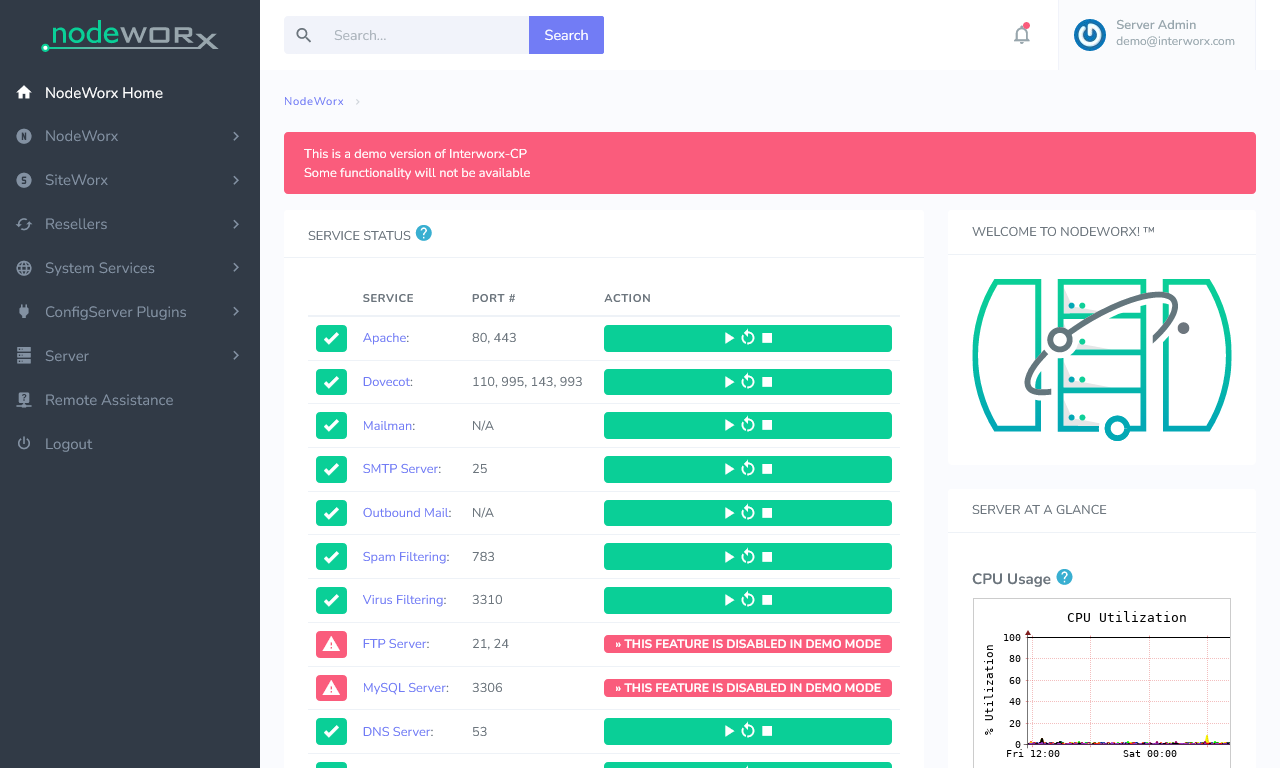

Virtualmin

| Features |

Status |

| |

|

| Primarily Designed For |

Small Hosting Company |

| Free/Paid |

Both |

| License |

Virtualmin Professional / GPLv3 |

| Supported OS |

RHEL / AlmaLinux / Rocky Linux / Oracle Linux / CentOS / Debian / Ubuntu |

| Supported Cloud Providers |

× |

| Install Method(s) |

Script |

| Web Console |

|

| |

|

| Virtualization |

Xen / KVM / OpenVZ / Vservers / Amazon EC2 / Solaris Zones / Google Compute Engine (GCE) / Docker (limited functionality)

Via Cloudmin (Free/Pro)

|

| Web Server |

Apache / Nginx |

| TLS 1.3 |

√ |

| HTTP/2 |

√ |

| HTTP/3 & QUIC |

× |

| AutoSSL |

LetsEncrypt |

| DNS Server |

BIND |

| DNS Zone Manager |

√ |

| DNSSEC |

√ |

| Multi-PHP |

√ |

| Database Server |

MariaDB / PostgreSQL / SQLite |

| Database Admin |

phpMyAdmin / phpPgAdmin |

| Email Server |

Postfix / Dovecot / QMail / Sendmail |

| Webmail |

Usermin / Roundcube / SquirrelMail |

| FTP Server |

ProFTPD / WU-FTP / VsFTPd |

| Caching |

? |

| |

|

| Email Validation |

SPF / DKIM / DMARC / DANE (TLSA) |

| Spam Protection |

SpamAssassin / Greylisting |

| Firewall |

CSF / Linux Firewall / Shorewall / Firewalld |

| WAF |

Fail2Ban / Comodo WAF (CWAF) |

| Virus / Malware Scanning |

ClamAV |

| |

|

| Reseller Accounts |

√ |

| User Accounts |

√ |

| Separate Panels (Admin / Users) |

√ |

| Hosting Packages |

√ |

| Quotas |

Disk / Bandwidth |

| Traffic Statistics |

Webalizer |

| Cron Jobs |

√ |

| Local Backup |

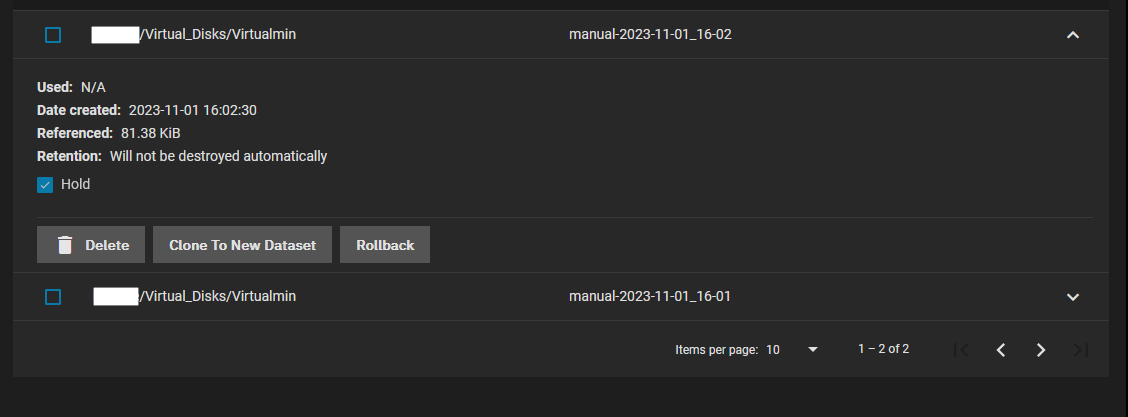

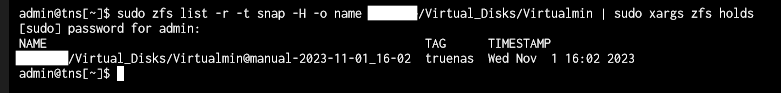

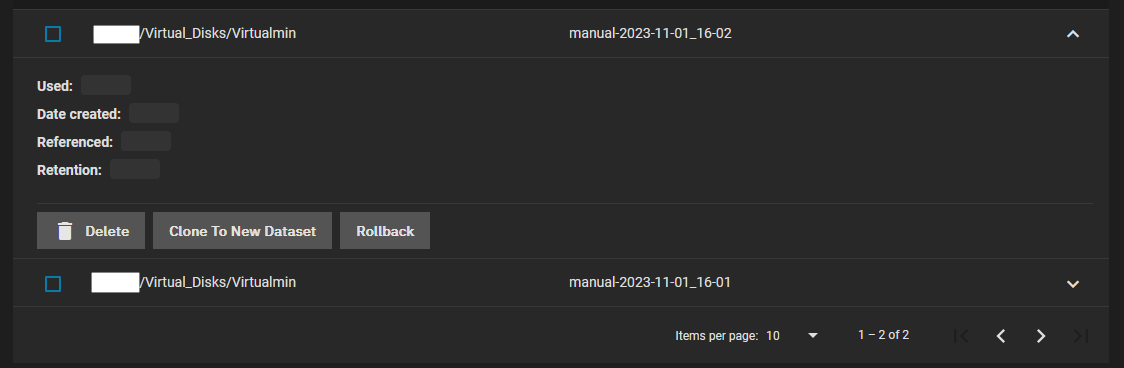

√ |

| External Backup |

FTP / SSH / AWS S3 / Dropbox (Pro only) / Azure Blob Storage (Pro only) / Google Cloud Platform (GCP) (Pro only) / Backblaze (Pro only) / Rackspace Cloud Files / Bacula |

| File Manager |

√ |

| |

|

| Extendable by Plugins |

√ |

| API |

√ |

| WHMCS Support |

√ |

| Panel Account Restrictions |

Unlimited number of accounts for Free tier.

The Pro version has more features such as 100+ install scripts, Reseller Accounts, User limits (Bandwidth, CPU, Memory...), More Stats and other features aimed at people who run businesses with Virtualmin. Pro customers can also file support tickets.

The Pro tiers are limited by the number of domains you want. Domains and Sub-Domains count towards this total.

|

| Server and Package Updates |

CLI / GUI |

| Automatic Updates |

√ |

| Can be Uninstalled |

√ |

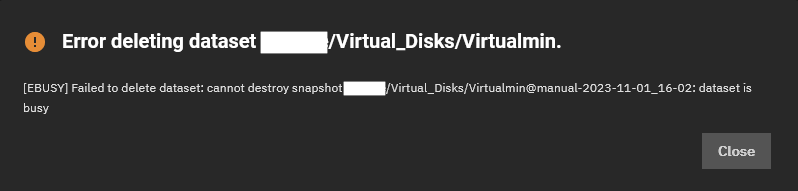

Virtualmin is a Webmin module for managing multiple virtual hosts through a single interface, like Plesk or cPanel. It supports the creation and management of Apache or Nginx virtual hosts, BIND DNS domains, MariaDB databases, and mailboxes and aliases with Postfix or Sendmail. It makes use of the existing Webmin modules for these servers, and so should work with any existing system configuration, rather than needing its own mail server, web server and so on.

Virtualmin can also create a Webmin user for each virtual server, who is restricted to managing just his domain and its files. Webmin’s existing module access control features are used, and are set up automatically to limit the user appropriately. These server administrators can also manage the mailboxes and mail aliases in their domain, via a web interface that is part of the module.

Virtualmin is a powerful and flexible web hosting control panel for Linux and BSD systems. Available in an Open Source community-supported version, and a more feature-filled version with premium support, Virtualmin is the cost-effective and comprehensive solution to virtual web hosting management. And, Virtualmin is the most popular and most comprehensive Open Source control panel with over 150,000 installations worldwide.

- Pros

- Very easy to install

- Updated often

- Very Stable

- Feature rich

- Covers all aspects of Linux server

- Can send push messages through your browser

- Can configure Apache modules in the GUI

- Can backup configuration

- Can backup files

- Can be expanded with plugins

- Heavily tested

- The most Feature rich of the Free and some of the Paid panels

- Email Greylisting

- Support Multi-PHP

- Supports Apache and Nginx out of the box.

- phpMyAdmin can be installed by a script (per account)

- Lots of documentation and is well written

- Very active community

- This has much more control over the server than any of the other panels.

- The interface and all of its CLI commands are Perl.

- Making it hard to kill your server.

- It is not reliant on any of the services it controls.

- Each domain gets its own separate resources.

- Extremely active and passionate development team.

- Cons

- You need some Linux experience to use this.

- You cannot select the version of MariaDB installed. The latest is installed. This is a Linux distro issue and can be changed manually.

- phpMyAdmin can only be installed on a per account basis using the install script. This leaves it open to the internet. It should run on a different port or be protected using the session ID.

- The Pro tiers are limited by the number of domains you want. Domains and Sub-Domains (Sub-Servers) count towards this total.

- Because of the theme and the layout, this is not for the casual user: there are many options all over the place, and you should not let your end clients log in.

- This is being improved constantly.

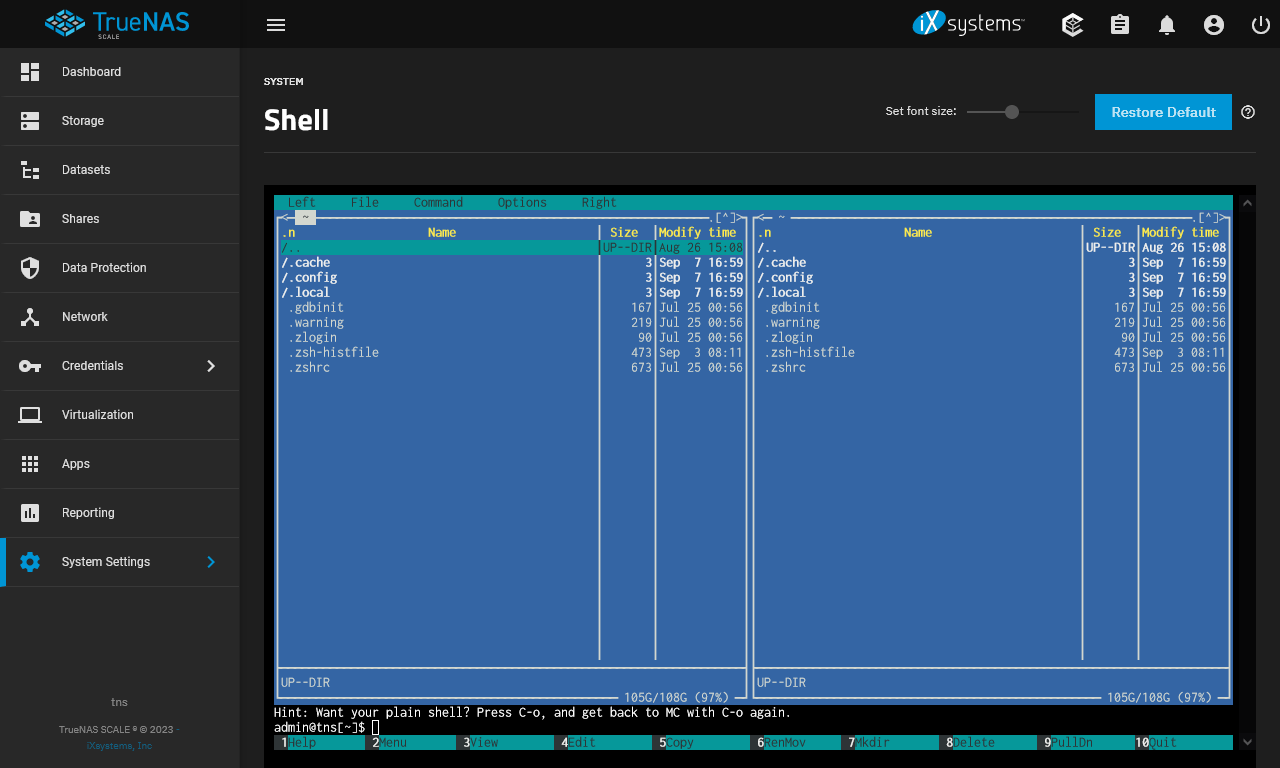

Notes

A lot of Webmin tutorials and information will apply to Virtualmin because Virtualmin is a plugin/module of Webmin so you should also check the Webmin section for information.

- Sites

- General

- Settings

- Greylisting

- Greylisting using Postgrey can now be set up using Virtualmin, via the new Email Greylisting page. In addition, whitelists for SMTP servers and email recipients can be managed.

- This feature was ported from the Pro version.

- Virtualmin --> Email Messages --> Email Greylisting

- Plugins

- File Locations / Repo Locations / Key Locations

- Virtualmin - Shared!

- Virtualmin Professional

- Virtualmin GPL

- GPG Keys

- Install

- Install CSF (ConfigServerFirewall)

- If you have an administrative user with sudo ALL privileges, commonly the first user on an Ubuntu system, you can use that user to login to Virtualmin.

- Downloading and Installing Virtualmin – Virtualmin (Official method)

- Virtualmin Professional – Virtualmin - Virtualmin GPL is already an extremely powerful and flexible virtual hosting control panel, so we're frequently asked about the differences between Virtualmin GPL and Virtualmin Professional. So, if you were wondering whether you should upgrade, you've come to the right place.

- Once you have downloaded the install script you can run it with the help switch, `install.sh --help`; it will print help information and will not install anything or modify your system.

- Automated Virtualmin Installation – Virtualmin

- There are two methods for installing Virtualmin. The first is a fully automated script described in this document, and the other is a manual installation documented in the Manual Virtualmin Installation page.

- This is the recommended method.

- Manual Installation – Virtualmin

- Unlike the Automated Virtualmin Installation, to make use of this installation type, your OS does not need to be freshly installed, nor does it need to be a supported operating system.

- This method, however, requires significantly more knowledge on the part of the person doing the installation, and a much larger time investment to ensure that all necessary configuration is performed and all Virtualmin managed services are working correctly.

- The install will appear to get stuck, but it is just slowly downloading assets. In total, the installation took about 20 minutes.

- Should certificates be put in /etc/ rather than the customer's home directory?

- The MySQL module installs MariaDB

- Update / Upgrade

- Uninstall

- Installation Instructions

- Misc

- Convert from Apache to Nginx

- Install Virtualmin + Nginx

- HTTP/2

- HTTP/2 (Nginx)

- To edit virtual host

- Webmin --> Servers --> Nginx Webserver --> Virtual Hosts --> <your domain> - The HTTP2 option is here

- Apply settings

- This edits: /etc/nginx/sites-enabled/virtualmin.mydomain.com.conf

- Create http2 nginx virtual server - Help! (Home for newbies) - Virtualmin Community

- Virtualmin Nginx module configuration page (button with cog icon on the top left) has an option for it to be enabled by default.
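To confirm that the HTTP/2 option actually landed in the virtual host file, a small check like the one below can help. It is only a sketch: the function name and the sample config are made up, and it just looks for the two common ways nginx enables HTTP/2 (the `http2` parameter on a `listen` line, or a separate `http2 on;` directive in newer nginx releases).

```python
import re


def http2_enabled(conf_text):
    """Return True if the nginx config text appears to enable HTTP/2."""
    for raw_line in conf_text.splitlines():
        # Ignore anything after a '#' so commented-out directives do not count.
        line = raw_line.split("#", 1)[0].strip()
        # Older style: "listen 443 ssl http2;"
        if re.match(r"listen\b.*\bhttp2\b", line):
            return True
        # Newer style: a standalone "http2 on;" directive
        if re.match(r"http2\s+on\s*;", line):
            return True
    return False


# Hypothetical sample; on a real server, read the virtual host file instead.
sample = """
server {
    listen 443 ssl http2;
    server_name example.com;
}
"""
print(http2_enabled(sample))
```

On a real server you would pass in the contents of the file mentioned above, for example `open("/etc/nginx/sites-enabled/virtualmin.mydomain.com.conf").read()`.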

- SPF / DKIM / DMARC

- Docker (via Cloudmin)

- Setting Up Docker Virtualization – Virtualmin

- Docker is not a true virtualization type like KVM, Xen or even LXC - instead it is a very lightweight container system that is typically used to run server processes in an environment that includes all their dependencies. Docker images normally contain a very basic Linux distribution and the files needed to run a single server like Apache, Nginx or MySQL.

- Webmin Docker module - An easy way to deploy containers - The Webmin Docker module allows you to create and manage Docker containers easily. It involves setting up a Docker Host, adding Docker images, etc.

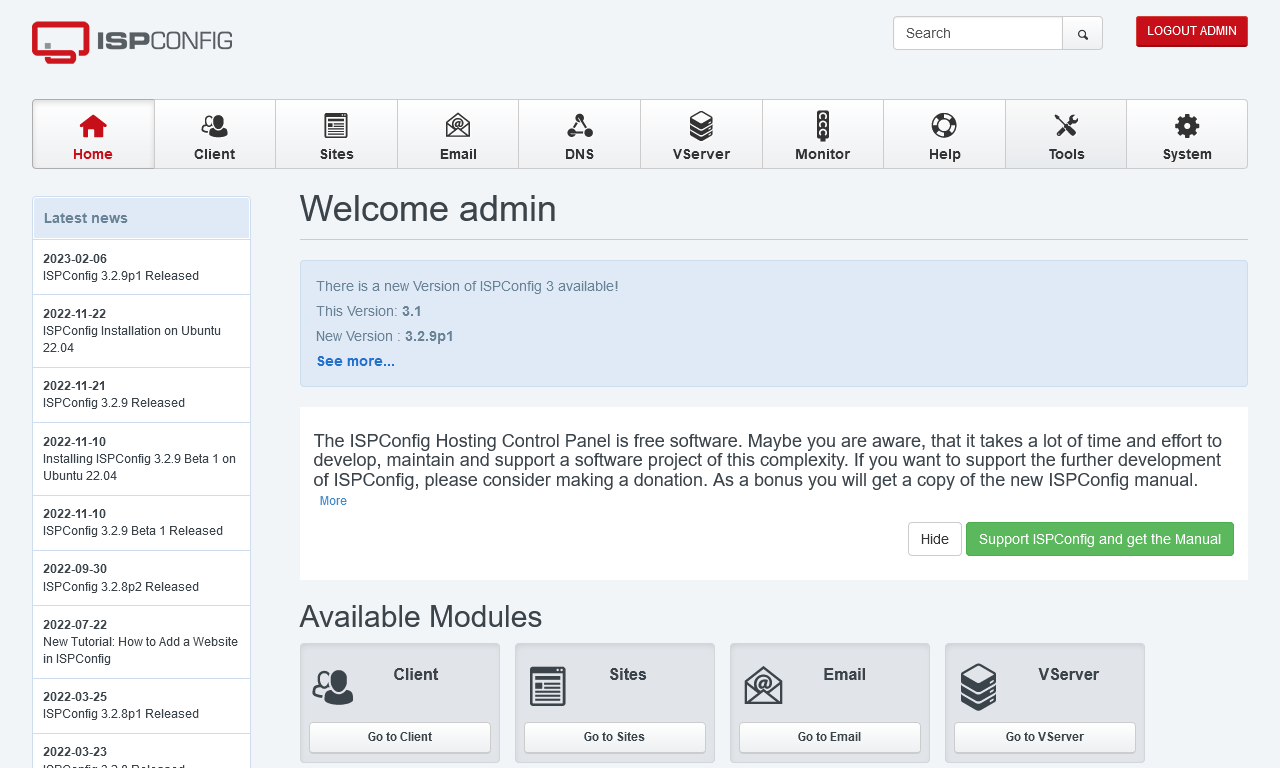

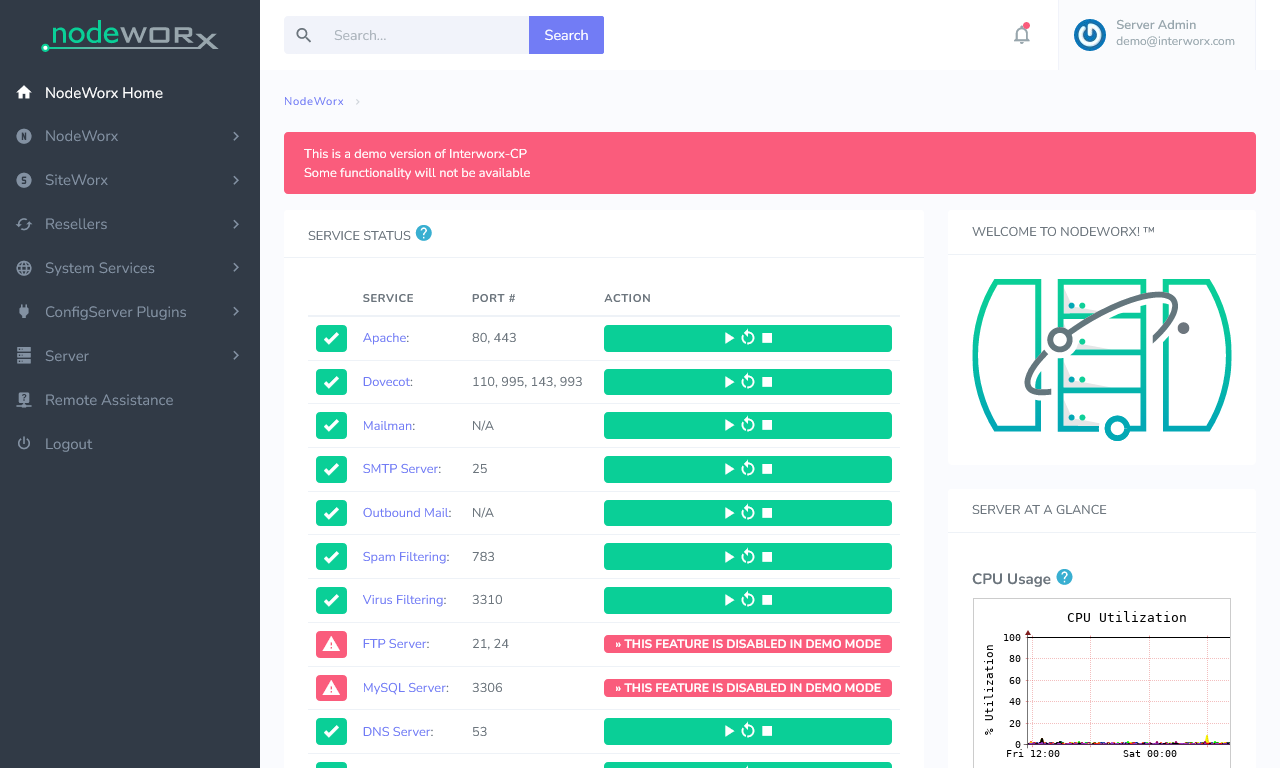

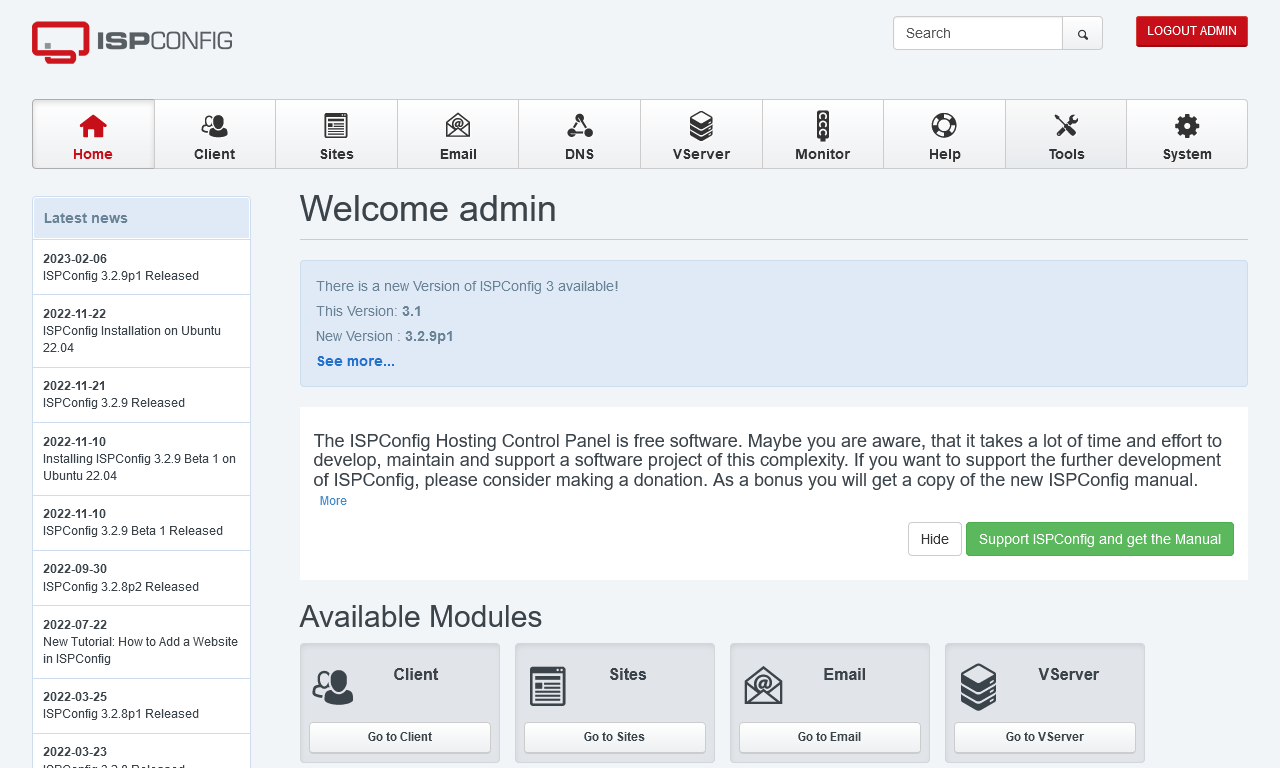

ISPConfig

| Features | Status |
| --- | --- |
| Primarily Designed For | Small Hosting Company |
| Free/Paid | Free |
| License | BSD 3-Clause |
| Supported OS | CentOS / Debian / Ubuntu |
| Supported Cloud Providers | × |
| Install Method(s) | Script |
| Web Console | |
| | |
| Virtualization | OpenVZ |
| Web Server | Apache / Nginx |
| TLS 1.3 | √ |
| HTTP/2 | √ |
| HTTP/3 & QUIC | × |
| AutoSSL | LetsEncrypt |
| DNS Server | BIND / PowerDNS |
| DNS Zone Manager | √ |
| DNSSEC | √ |
| Multi-PHP | √ |
| Database Server | MySQL / MariaDB |
| Database Admin | phpMyAdmin (not native) |
| Email Server | Postfix / Dovecot |
| Webmail | Roundcube / SquirrelMail / Exchange |
| FTP Server | Pure-FTPd |
| Caching | ? |
| | |
| Email Validation | SPF / DKIM / DMARC |
| Spam Protection | SpamAssassin / Rspamd / Amavis / Greylisting |
| Firewall | √ |
| WAF | × |
| Virus / Malware Scanning | ClamAV / RKHunter / ISPProtect (Paid Addon) |
| | |
| Reseller Accounts | √ |
| User Accounts | √ |
| Separate Panels (Admin / Users) | × |
| Hosting Packages | √ |
| Quotas | Disk / Bandwidth |
| Traffic Statistics | AWStats / Webalizer / GoAccess |
| Cron Jobs | √ |
| Local Backup | √ |
| External Backup | ? |
| File Manager | × |
| | |
| Extendable by Plugins | √ |
| API | √ |
| WHMCS Support | √ |
| Panel Account Restrictions | × |
| Server and Package Updates | GUI |
| Automatic Updates | √ |
| Can be Uninstalled | × |

ISPConfig is an open source hosting control panel for Linux which allows website owners to easily administer their sites, similar to cPanel and Plesk. It also allows resellers to manage multiple accounts on multiple physical or virtual servers from one control panel. This panel will have a lot more potential once it removes the need to manually install basics such as phpMyAdmin and a file manager.

- Pros

- Can grab email from a remote email mailbox.

- Manage multiple servers from one control panel.

- Single server, multiserver and mirrored clusters.

- Virtualization

- This panel has a lot of technology added.

- The layout is very straightforward and easy to use.

- OpenVZ – allows virtual machines for client sites.

- Cons

- Some things have to be installed manually.

- The plugin system needs to be improved (I have not verified this).

- The panel theme is a bit dated.

Notes

- Sites

- General

- Settings

- The SPF/DKIM/DMARC settings tabs in the DNS zone are really easy to use.
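For reference, this is a minimal sketch of the kind of TXT record values that SPF and DMARC settings end up producing. It is not taken from ISPConfig itself. The helper names, the chosen mechanisms and the example mailbox are assumptions for illustration, and DKIM is left out because it needs a generated key pair.

```python
def spf_record(mechanisms, policy="~all"):
    """Build an SPF TXT value from a list of mechanisms, e.g. ['mx', 'a']."""
    return " ".join(["v=spf1", *mechanisms, policy])


def dmarc_record(policy="none", rua=None):
    """Build a DMARC TXT value; it is published at _dmarc.<your domain>."""
    tags = ["v=DMARC1", f"p={policy}"]
    if rua:
        # Address that receives aggregate reports (hypothetical mailbox below).
        tags.append(f"rua=mailto:{rua}")
    return "; ".join(tags)


print(spf_record(["mx", "a"]))
print(dmarc_record("quarantine", "postmaster@example.com"))
```

The SPF value goes in a TXT record on the domain itself, while the DMARC value goes in a TXT record on the `_dmarc` subdomain.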

- phpMyAdmin

- Install phpMyAdmin (Instructions below not verified)

- Login as admin in ISPConfig, go to Management -> Update Manager -> Update

- Install the phpMyAdmin package from www.ispconfig.org ( http://www.ispconfig.org/downloads/phpmyadmin-2.6.4-pl3.pkg ) using Management -> Update Manager -> Update.

- Opening phpMyAdmin directly from ISPConfig - Set the URL to your phpMyAdmin installation under System --> Interface config.